Lecture 21: A Bounded Queue

Reminder

Proof of Concept for final project due this Friday.

Overview

- Testing Previous Queues

- A Bounded Queue

- Stacks

Previously

- Unbounded Queue with Locks

  - lock enq and deq methods

- Lock-free Unbounded Queue

  - use AtomicReference for head, tail, next

  - use compareAndSet to validate & update atomically

  - update head/tail proactively

  - guard against incomplete deq/enq

Lock-free Dequeue Method

    public T deq() throws EmptyException {
        while (true) {
            Node first = head.get();
            Node last = tail.get();
            Node next = first.next.get();
            if (first == head.get()) {              // is the snapshot still consistent?
                if (first == last) {
                    if (next == null) {
                        throw new EmptyException(); // queue is empty
                    }
                    tail.compareAndSet(last, next); // tail lags: help finish an incomplete enq
                } else {
                    T value = next.value;
                    if (head.compareAndSet(first, next))
                        return value;
                }
            }
        }
    }

Testing our Queues

Bounded Queues

- Both previous queues were unbounded

- Sometimes we want bounded queues:

- have limited space

- want to force tighter synchronization between producers & consumers

How can we implement a bounded queue (with locks)?

One Option

Keep track of size!

- Start with our UnboundedQueue

  - lock enq and deq methods

- Add a final int capacity field

- Add an AtomicInteger size field

  - increment upon enq

  - decrement upon deq

- Make sure size <= capacity

Why should size be atomic?
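
One way to approach this question: enq holds enqLock while deq holds deqLock, so an enqueuer and a dequeuer can update size at the same moment without excluding each other. The sketch below models only that situation. The class name SizeCounterDemo and the plain array counter are illustrative assumptions, not part of the lecture's queue.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class (not from the lecture): one "enqueuer" and one
// "dequeuer" update a shared size counter with no common lock between them.
class SizeCounterDemo {
    static final int OPS = 100_000;

    // Returns {atomicResult, plainResult} after OPS increments and OPS decrements.
    static int[] run() {
        AtomicInteger atomicSize = new AtomicInteger(0);
        int[] plainSize = {0}; // unsynchronized counter, for comparison only

        Thread enqueuer = new Thread(() -> {
            for (int i = 0; i < OPS; i++) {
                atomicSize.getAndIncrement(); // atomic read-modify-write
                plainSize[0]++;               // racy read-modify-write
            }
        });
        Thread dequeuer = new Thread(() -> {
            for (int i = 0; i < OPS; i++) {
                atomicSize.getAndDecrement();
                plainSize[0]--;
            }
        });
        enqueuer.start();
        dequeuer.start();
        try {
            enqueuer.join();
            dequeuer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] { atomicSize.get(), plainSize[0] };
    }

    public static void main(String[] args) {
        int[] result = run();
        System.out.println("atomic size: " + result[0]); // always 0
        System.out.println("plain size:  " + result[1]); // may be nonzero: updates can be lost
    }
}
```

The atomic counter always ends at 0 because each increment and decrement is a single indivisible step. The plain counter may drift because its read, add, and write can interleave with the other thread's.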

Enqueue Method

- acquires enqLock

- if size is less than capacity

  - enqueue item

  - increment size

  - release lock

- otherwise

  - throw exception? (total method)

  - wait until size < capacity? (partial method)

Dequeue Method

- acquires deqLock

- if size is greater than 0

  - dequeue item

  - decrement size

  - release lock

- otherwise

  - throw exception? (total method)

  - wait until size > 0? (partial method)

An Unexceptional Queue

Suppose we don’t want to throw exceptions

- Full/empty queue operations are expected, not exceptional

- Queue should handle these cases by having threads wait

Question: How might we implement this behavior?

Enqueue with Waiting

    public void enq(T value) {
        enqLock.lock();
        try {
            Node nd = new Node(value);
            while (size.get() == capacity) { } // wait until not full
            tail.next = nd;
            tail = nd;
            size.getAndIncrement();            // record the new item
        } finally {
            enqLock.unlock();
        }
    }

A Problem?

This is wasteful!

    while (size.get() == capacity) { } // wait until not full

The thread:

- Acquires lock

- Fails to enqueue while holding lock

- Uses resources to repeatedly check the condition size.get() == capacity

What if it takes a while until the queue is not full?

A More Prudent Way

The following would be better:

- enqueuer sees that size.get() == capacity

- enqueuer temporarily gives up lock

- enqueuer passively waits for the condition that size.get() < capacity

  - not constantly checking

- dequeuer sees that enqueuers are waiting

- after dequeue, dequeuer notifies waiting enqueuers

- enqueuer acquires lock, enqueues

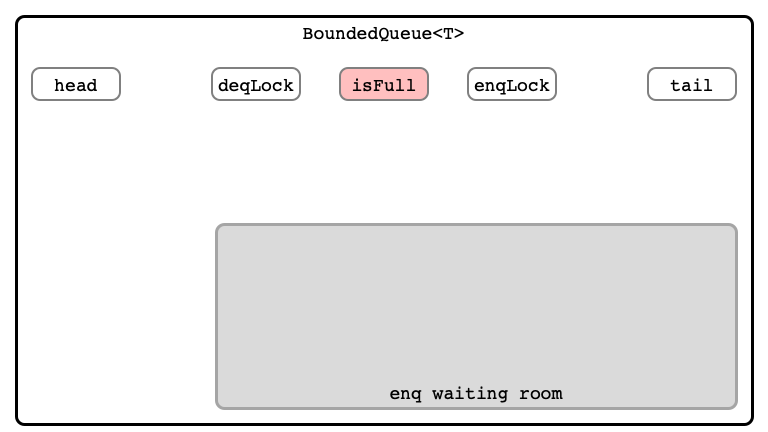

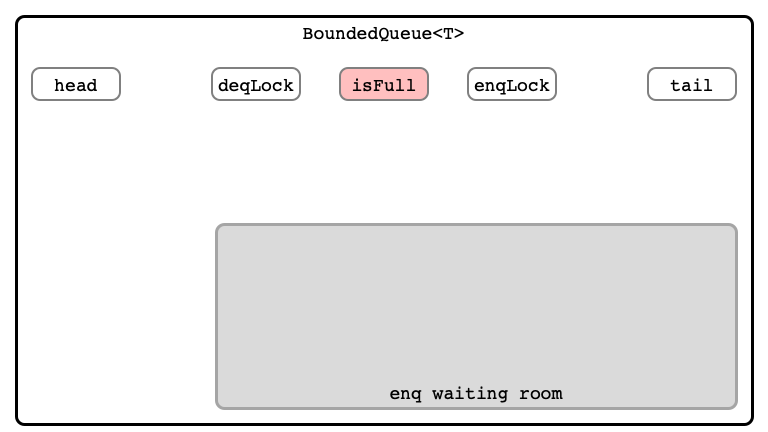

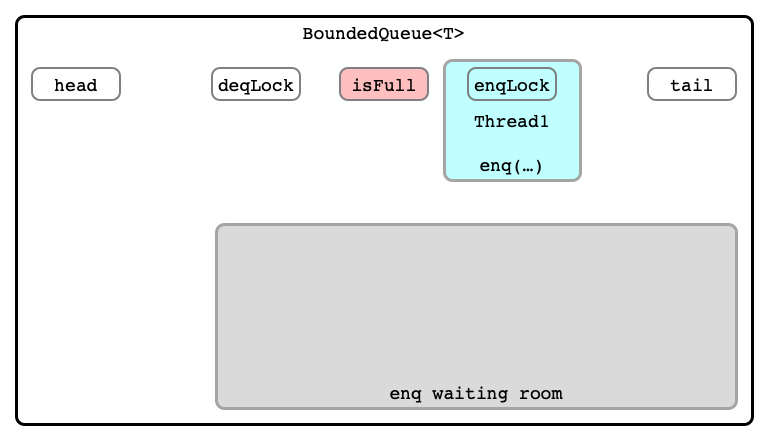

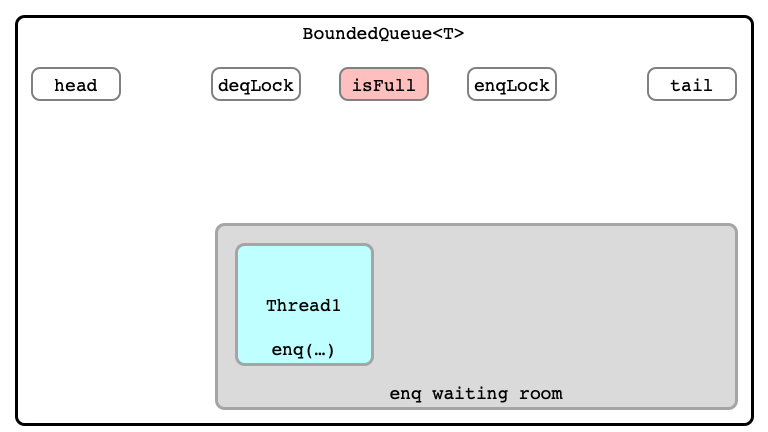

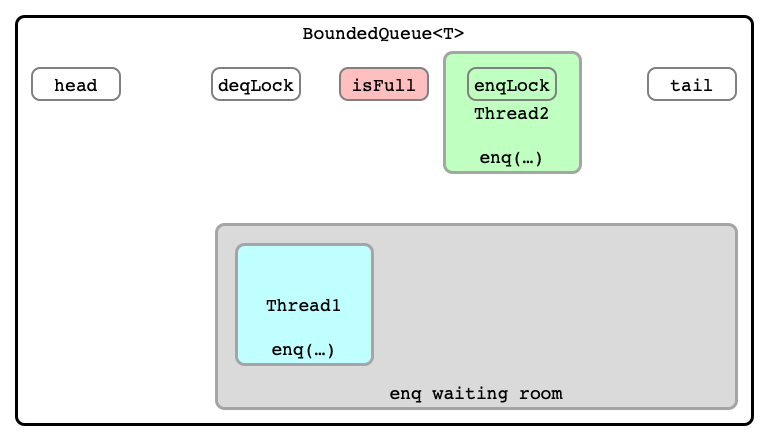

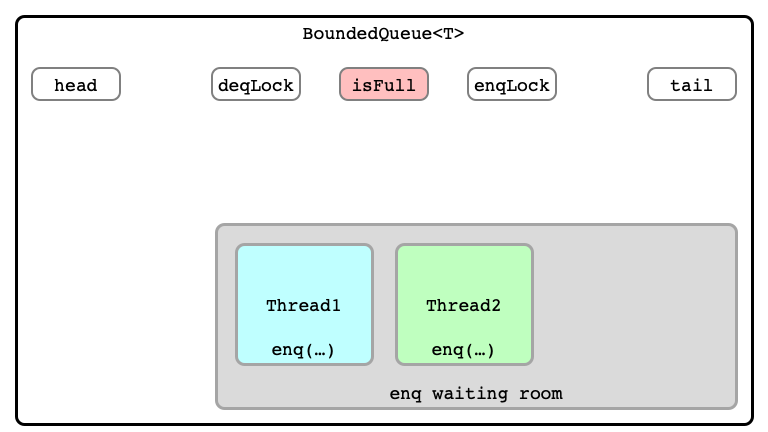

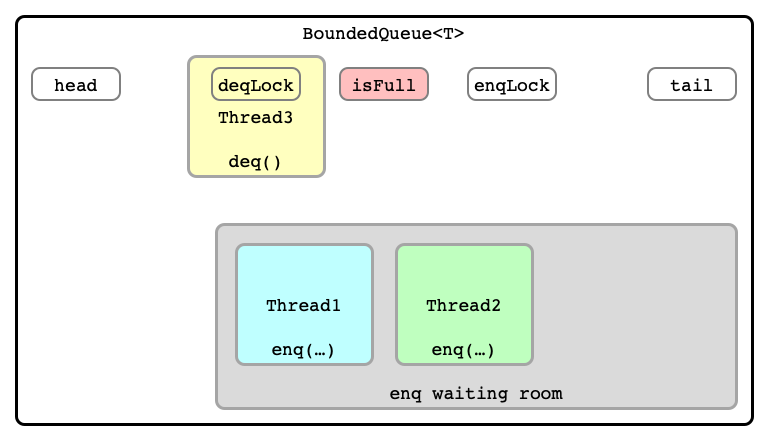

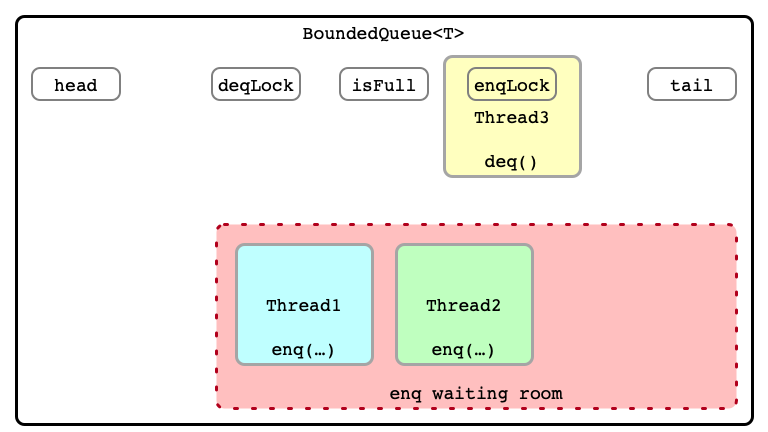

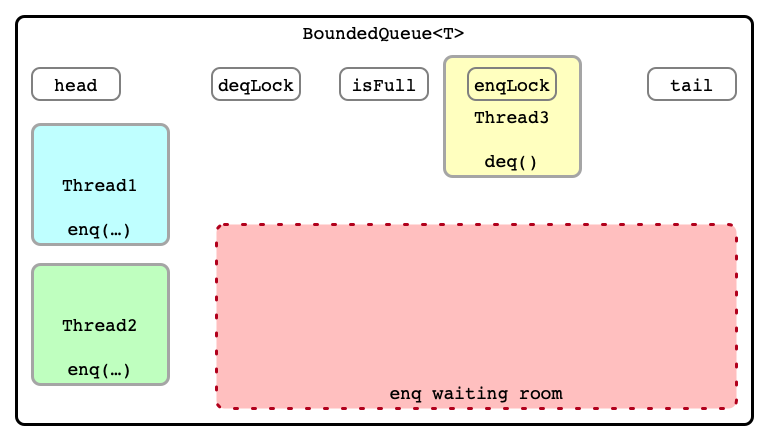

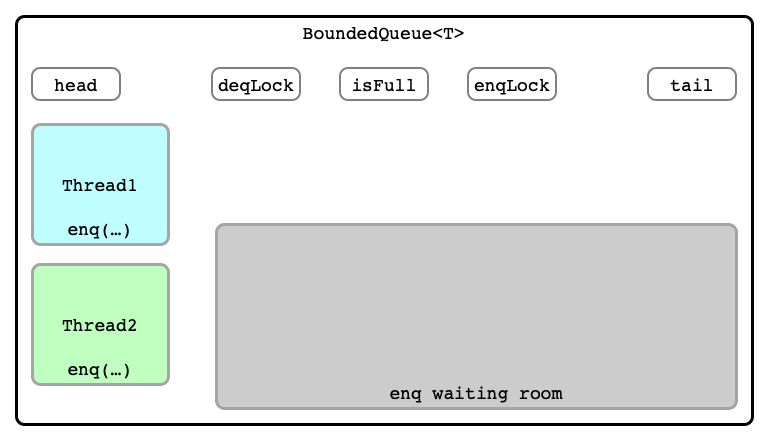

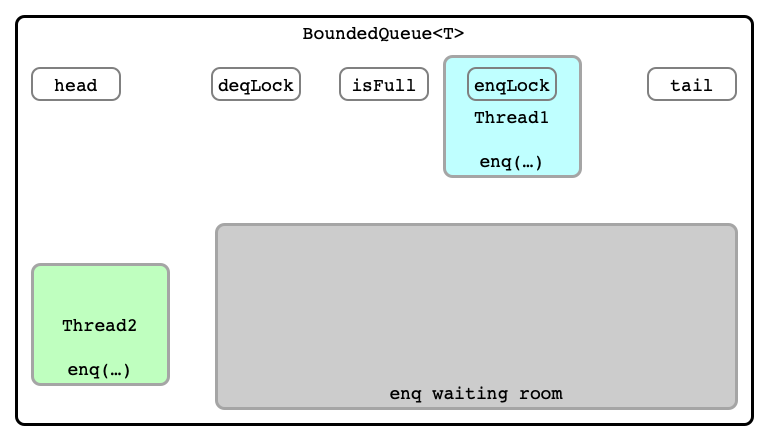

A Waiting Room Analogy

Queue Full

Enqueuer Arrives, Acquires Lock

Enqueuer Sees Full, Waits

Enqueuer Arrives, Acquires Lock

Enqueuer Sees Full, Waits

Dequeuer Arrives, Sees Full, Dequeues

Dequeuer Announces No Longer Full

Enqueuers Leaving Waiting Room

Dequeuer Releases Lock

Enqueuer Locks; Enqueue Ensues

The Lock and Condition Interfaces

The Lock interface defines a curious method:

- Condition newCondition() returns a new Condition instance that is bound to this Lock instance

The Condition interface

- void await() causes the current thread to wait until it is signalled or interrupted

- void signal() wakes up one waiting thread

- void signalAll() wakes up all waiting threads
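
To make these methods concrete, here is a minimal sketch of one thread waiting passively on a Condition until another thread changes shared state and signals. The class ConditionDemo and its members (ready, isReady, awaitReady, markReady) are hypothetical names chosen for illustration. Only Lock, ReentrantLock, and Condition come from java.util.concurrent.locks.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example (names are not from the lecture).
class ConditionDemo {
    private final Lock lock = new ReentrantLock();
    private final Condition ready = lock.newCondition(); // bound to lock
    private boolean isReady = false;                     // guarded by lock

    // Blocks until markReady() has been called.
    void awaitReady() throws InterruptedException {
        lock.lock();
        try {
            // Re-check in a loop: await() may return spuriously.
            while (!isReady) {
                ready.await(); // releases lock while waiting, reacquires before returning
            }
        } finally {
            lock.unlock();
        }
    }

    void markReady() {
        lock.lock(); // signalling requires holding the associated lock
        try {
            isReady = true;
            ready.signalAll();
        } finally {
            lock.unlock();
        }
    }

    // Returns true if the waiting thread finished after the state changed.
    static boolean run() {
        ConditionDemo demo = new ConditionDemo();
        boolean[] finished = {false};
        Thread waiter = new Thread(() -> {
            try {
                demo.awaitReady();
                finished[0] = true;
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        demo.markReady();
        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return finished[0];
    }

    public static void main(String[] args) {
        System.out.println("waiter finished: " + run());
    }
}
```

The while loop around await() matters: the waiter re-checks the condition after waking, so it behaves correctly whether markReady() runs before or after it starts waiting.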

Improving our Queue

Define a condition for enqLock: notFullCondition

When enqueuing to a full queue

- thread signals that it is waiting

  - need a flag (volatile boolean) for this

- thread calls notFullCondition.await()

  - waits until notFullCondition is satisfied

When Dequeueing

When dequeueing

- dequeuer checks whether an enqueuer is waiting

  - checks flag for this

- if so, after dequeue, dequeuer calls notFullCondition.signalAll()

  - signals that queue is no longer full

(Similar: notEmptyCondition for deq method)

Making a BoundedQueue

    public class BoundedQueue<T> implements SimpleQueue<T> {
        ReentrantLock enqLock, deqLock;
        Condition notEmptyCondition, notFullCondition;
        AtomicInteger size;
        volatile Node head, tail;
        final int capacity;

        public BoundedQueue(int capacity) {
            this.capacity = capacity;
            this.head = new Node(null);
            this.tail = this.head;
            this.size = new AtomicInteger(0);
            this.enqLock = new ReentrantLock();
            this.notFullCondition = this.enqLock.newCondition();
            this.deqLock = new ReentrantLock();
            this.notEmptyCondition = this.deqLock.newCondition();
        }

        public void enq(T item) { ... }

        public T deq() { ... }

        class Node { ... }
    }

Enqueueing

    public void enq(T item) {
        boolean mustWakeDequeuers = false;
        Node nd = new Node(item);
        enqLock.lock();
        try {
            // wait (releasing enqLock) until the queue is not full
            while (size.get() == capacity) {
                try {
                    // System.out.println("Queue full!");
                    notFullCondition.await();
                } catch (InterruptedException e) {
                    // do nothing
                }
            }
            tail.next = nd;
            tail = nd;
            // queue was empty before this enq: dequeuers may be waiting
            if (size.getAndIncrement() == 0) {
                mustWakeDequeuers = true;
            }
        } finally {
            enqLock.unlock();
        }
        // signal only after releasing enqLock; deqLock must be held to signal
        if (mustWakeDequeuers) {
            deqLock.lock();
            try {
                notEmptyCondition.signalAll();
            } finally {
                deqLock.unlock();
            }
        }
    }

Dequeueing

    public T deq() {
        T item;
        boolean mustWakeEnqueuers = false;
        deqLock.lock();
        try {
            // wait (releasing deqLock) until the queue is not empty
            while (head.next == null) {
                try {
                    // System.out.println("Queue empty!");
                    notEmptyCondition.await();
                } catch (InterruptedException e) {
                    // do nothing
                }
            }
            item = head.next.item;
            head = head.next;
            // queue was full before this deq: enqueuers may be waiting
            if (size.getAndDecrement() == capacity) {
                mustWakeEnqueuers = true;
            }
        } finally {
            deqLock.unlock();
        }
        // signal only after releasing deqLock; enqLock must be held to signal
        if (mustWakeEnqueuers) {
            enqLock.lock();
            try {
                notFullCondition.signalAll();
            } finally {
                enqLock.unlock();
            }
        }
        return item;
    }

Testing the Queue
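
One way such a test might look, as a sketch rather than the lecture's own harness: several producers and consumers share a small-capacity queue, and afterwards we check that the dequeued values add up to the enqueued values and that the queue is empty. To keep the file self-contained, it includes a condensed copy of the BoundedQueue with two assumptions: a Node class with an item field and a volatile next field (the lecture elides Node), and awaitUninterruptibly() in place of the try/catch around await(). The class name BoundedQueueTest, the signalAll helper, and the run parameters are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical test harness with a condensed copy of the queue.
class BoundedQueueTest {
    static class BoundedQueue<T> {
        private final ReentrantLock enqLock = new ReentrantLock();
        private final ReentrantLock deqLock = new ReentrantLock();
        private final Condition notFullCondition = enqLock.newCondition();
        private final Condition notEmptyCondition = deqLock.newCondition();
        final AtomicInteger size = new AtomicInteger(0);
        private final int capacity;
        private volatile Node head = new Node(null); // sentinel node
        private volatile Node tail = head;

        class Node { // assumed layout: the lecture does not show this class
            final T item;
            volatile Node next;
            Node(T item) { this.item = item; }
        }

        BoundedQueue(int capacity) { this.capacity = capacity; }

        void enq(T item) {
            boolean mustWakeDequeuers;
            enqLock.lock();
            try {
                while (size.get() == capacity) notFullCondition.awaitUninterruptibly();
                Node nd = new Node(item);
                tail.next = nd;
                tail = nd;
                mustWakeDequeuers = (size.getAndIncrement() == 0);
            } finally {
                enqLock.unlock();
            }
            if (mustWakeDequeuers) signalAll(deqLock, notEmptyCondition);
        }

        T deq() {
            T item;
            boolean mustWakeEnqueuers;
            deqLock.lock();
            try {
                while (head.next == null) notEmptyCondition.awaitUninterruptibly();
                item = head.next.item;
                head = head.next;
                mustWakeEnqueuers = (size.getAndDecrement() == capacity);
            } finally {
                deqLock.unlock();
            }
            if (mustWakeEnqueuers) signalAll(enqLock, notFullCondition);
            return item;
        }

        // Acquires the lock that owns cond, wakes all waiters, releases the lock.
        private static void signalAll(ReentrantLock lock, Condition cond) {
            lock.lock();
            try { cond.signalAll(); } finally { lock.unlock(); }
        }
    }

    // Runs `threads` producers and `threads` consumers, each handling perThread items.
    // Returns true if the dequeued sum equals the enqueued sum and the queue ends empty.
    static boolean run(int threads, int perThread, int capacity) {
        BoundedQueue<Integer> q = new BoundedQueue<>(capacity);
        AtomicLong dequeuedSum = new AtomicLong(0);
        Thread[] workers = new Thread[2 * threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread; // each producer enqueues distinct values
            workers[2 * t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) q.enq(base + i);
            });
            workers[2 * t + 1] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) dequeuedSum.addAndGet(q.deq());
            });
        }
        for (Thread w : workers) w.start();
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        long n = (long) threads * perThread;
        long expectedSum = n * (n - 1) / 2; // 0 + 1 + ... + (n - 1)
        return dequeuedSum.get() == expectedSum && q.size.get() == 0;
    }

    public static void main(String[] args) {
        System.out.println("test passed: " + run(4, 10_000, 3));
    }
}
```

A small capacity relative to the number of items forces both the full and the empty waiting paths to be exercised. A matching sum is only a sanity check; it would not, for example, detect items dequeued out of FIFO order.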

For Your Consideration

What if we want to make a lock-free bounded queue?

- Can we just add size and capacity fields to our LockFreeQueue?

Stacks

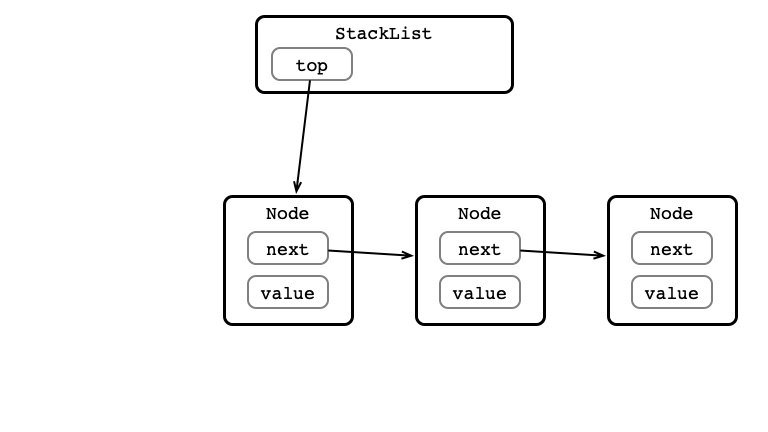

Recall the Stack

Basic operations

- void push(T item) add a new item to the top of the stack

- T pop() remove top item from the stack and return it

  - throw EmptyException if stack was empty

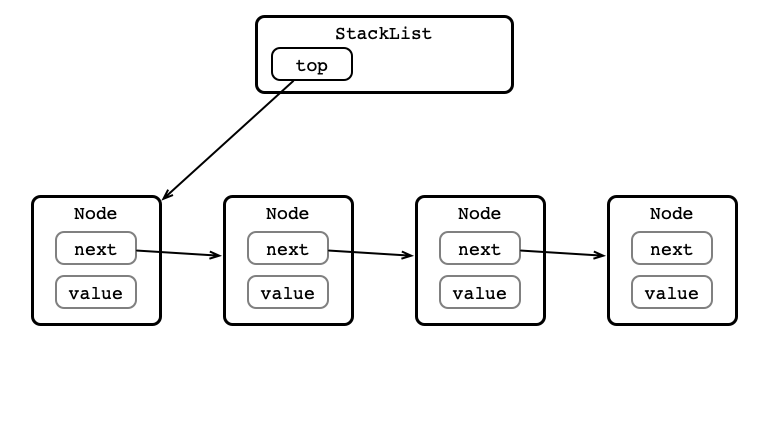

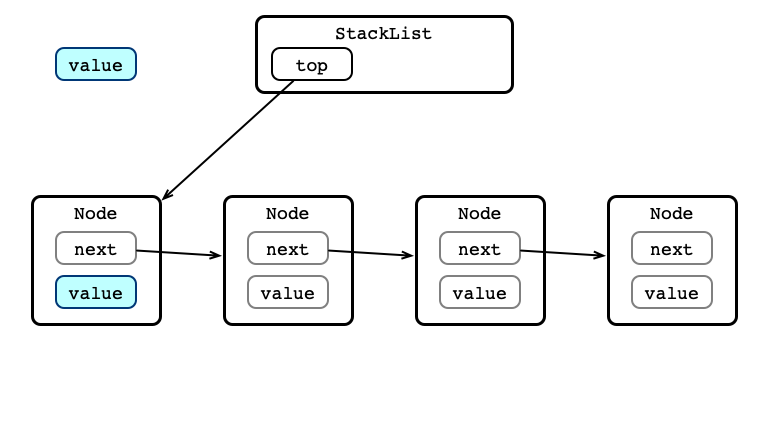

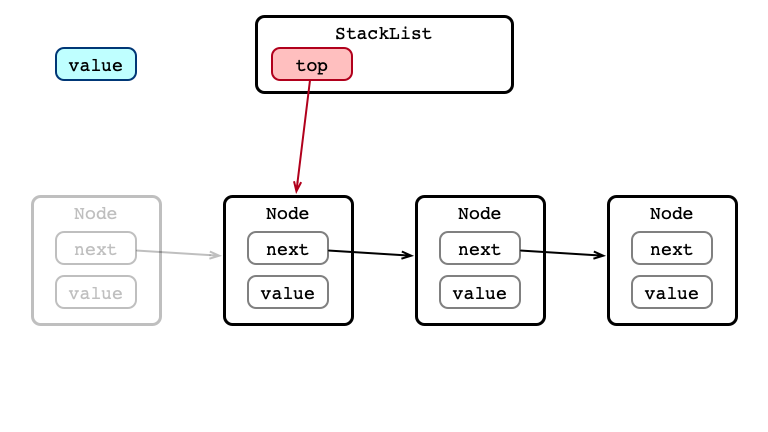

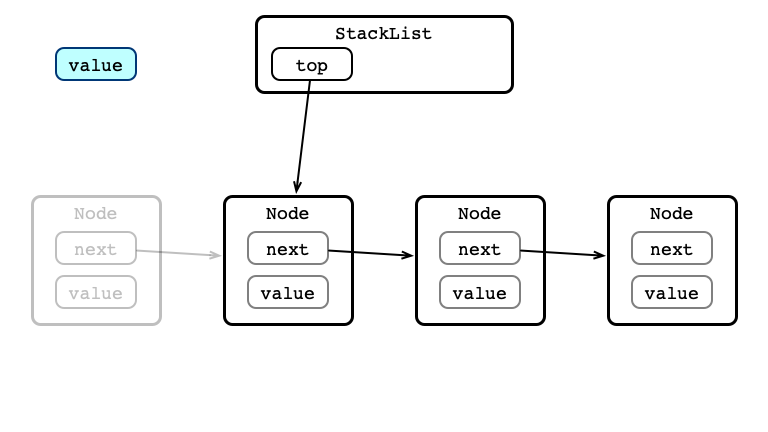

Linked List Implementation

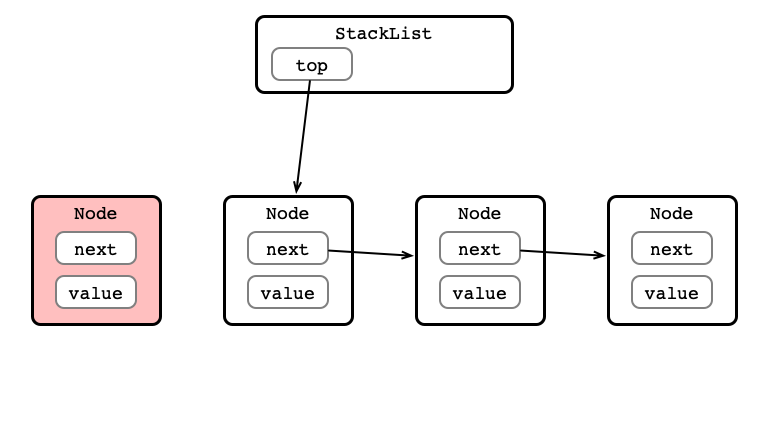

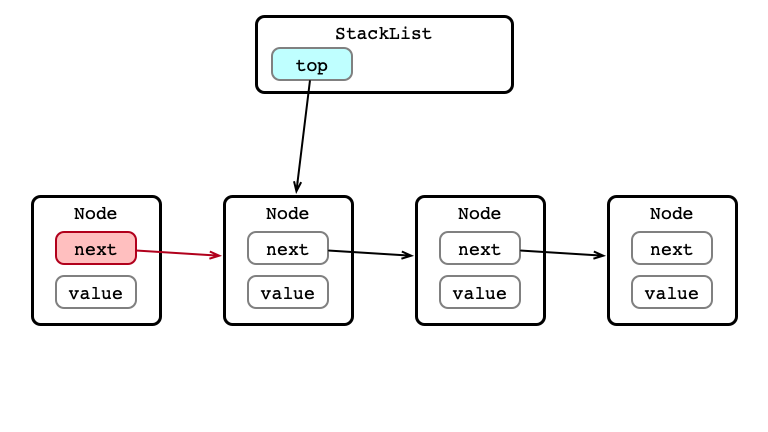

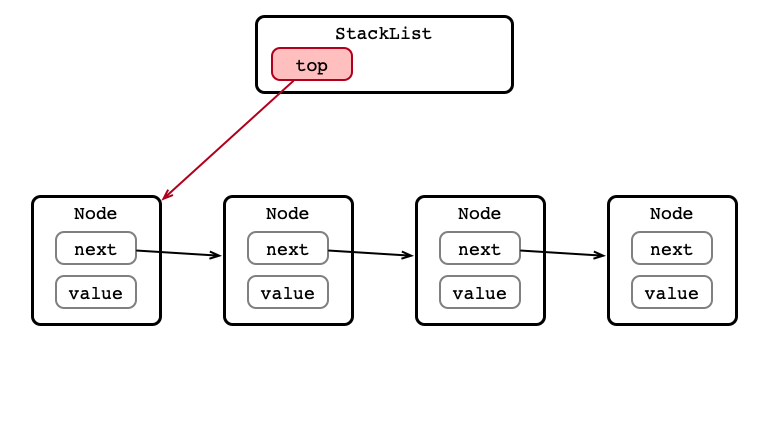

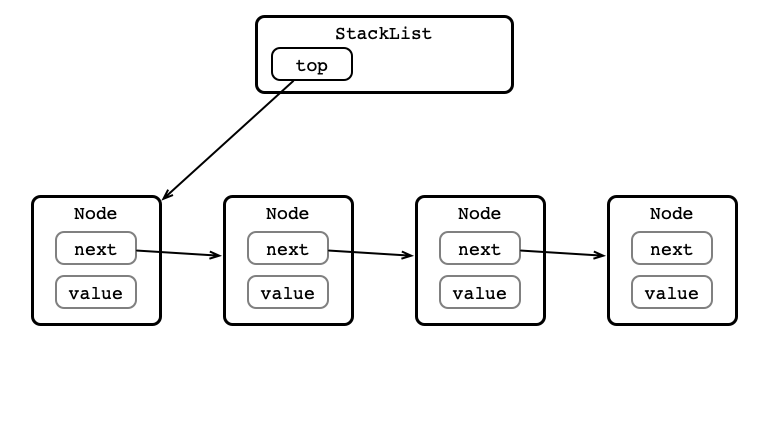

push() Step 1: Create Node

push() Step 2: Set next

push() Step 3: Set head

push() Complete

pop()?

pop() Step 1: Store value

pop() Step 2: Update head

pop() Step 3: Return value

Concurrent Stack

With locks:

- Since all operations modify head, coarse locking is the natural choice
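
A coarse-locked stack along these lines might look like the following sketch, which wraps the push and pop steps listed earlier in a single lock. The class name LockedStack, its Node layout, and the nested EmptyException are assumptions made for illustration; the lecture does not show its own EmptyException definition.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: a linked-list stack guarded by one lock.
class LockedStack<T> {
    private final ReentrantLock lock = new ReentrantLock();
    private Node<T> head = null; // guarded by lock

    private static class Node<T> {
        final T value;
        final Node<T> next;
        Node(T value, Node<T> next) { this.value = value; this.next = next; }
    }

    // Stand-in for the lecture's EmptyException.
    static class EmptyException extends Exception { }

    public void push(T item) {
        lock.lock();
        try {
            head = new Node<>(item, head); // create node, set next, set head
        } finally {
            lock.unlock();
        }
    }

    public T pop() throws EmptyException {
        lock.lock();
        try {
            if (head == null) throw new EmptyException();
            T value = head.value; // step 1: store value
            head = head.next;     // step 2: update head
            return value;         // step 3: return value
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws EmptyException {
        LockedStack<String> stk = new LockedStack<>();
        stk.push("a");
        stk.push("b");
        System.out.println(stk.pop()); // prints "b"
        System.out.println(stk.pop()); // prints "a"
    }
}
```

Because every operation touches head, one lock serializes all pushes and pops; there is no second end of the structure to lock separately, as there was with the queue.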

Without locks?

A Lock-free Stack

Use linked-list implementation

- Logic is simpler than queues’ because all operations affect same node

- Idea:

  - store top as an AtomicReference<Node>

  - use compareAndSet to modify top

    - success, or retry

- Unlike queue:

  - item on top of stack precisely when top points to item's Node

Implementing the Lock-Free Stack

    public class LockFreeStack<T> implements SimpleStack<T> {
        AtomicReference<Node> top = new AtomicReference<Node>(null);

        public void push(T item) {...}

        public T pop() throws EmptyException {...}

        class Node {
            public T value;
            public AtomicReference<Node> next;

            public Node(T value) {
                this.value = value;
                this.next = new AtomicReference<Node>(null);
            }
        }
    }

Implementing push

    public void push(T item) {
        Node nd = new Node(item);
        Node oldTop = top.get();
        nd.next.set(oldTop);
        // retry until top is swung from the value we read to the new node
        while (!top.compareAndSet(oldTop, nd)) {
            oldTop = top.get();
            nd.next.set(oldTop);
        }
    }

Implementing pop

    public T pop() throws EmptyException {
        while (true) {
            Node oldTop = top.get();
            if (oldTop == null) {
                throw new EmptyException();
            }
            Node newTop = oldTop.next.get();
            // succeeds only if no other thread changed top in the meantime
            if (top.compareAndSet(oldTop, newTop)) {
                return oldTop.value;
            }
        }
    }
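
To try the stack under contention, a harness along the following lines could be used. It is a sketch under stated assumptions: the stack is a condensed copy of the code above, EmptyException is a stand-in definition, and LockFreeStackDemo and run are hypothetical names. Each thread pushes its own values and then pops the same number of values, so a pop should never find the stack empty.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical harness with a condensed copy of the lock-free stack.
class LockFreeStackDemo {
    static class EmptyException extends Exception { } // stand-in definition

    static class LockFreeStack<T> {
        private final AtomicReference<Node> top = new AtomicReference<>(null);

        class Node {
            final T value;
            final AtomicReference<Node> next = new AtomicReference<>(null);
            Node(T value) { this.value = value; }
        }

        void push(T item) {
            Node nd = new Node(item);
            Node oldTop;
            do {
                oldTop = top.get();
                nd.next.set(oldTop);
            } while (!top.compareAndSet(oldTop, nd)); // retry if top changed
        }

        T pop() throws EmptyException {
            while (true) {
                Node oldTop = top.get();
                if (oldTop == null) throw new EmptyException();
                if (top.compareAndSet(oldTop, oldTop.next.get())) return oldTop.value;
            }
        }
    }

    // Each thread pushes perThread distinct values, then pops perThread values.
    // Returns true if the popped sum equals the pushed sum, no pop saw an empty
    // stack, and the stack is empty at the end.
    static boolean run(int threads, int perThread) {
        LockFreeStack<Integer> stk = new LockFreeStack<>();
        AtomicLong poppedSum = new AtomicLong(0);
        AtomicBoolean sawEmpty = new AtomicBoolean(false);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;
            workers[t] = new Thread(() -> {
                try {
                    for (int i = 0; i < perThread; i++) stk.push(base + i);
                    for (int i = 0; i < perThread; i++) poppedSum.addAndGet(stk.pop());
                } catch (EmptyException e) {
                    sawEmpty.set(true); // not expected in this workload
                }
            });
        }
        for (Thread w : workers) w.start();
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        boolean emptyAtEnd;
        try {
            stk.pop();
            emptyAtEnd = false;
        } catch (EmptyException e) {
            emptyAtEnd = true;
        }
        long n = (long) threads * perThread;
        return !sawEmpty.get() && emptyAtEnd && poppedSum.get() == n * (n - 1) / 2;
    }

    public static void main(String[] args) {
        System.out.println("test passed: " + run(4, 10_000));
    }
}
```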

Sequential Bottleneck

Modifying top

- No matter how many threads, push/pop rate limited by top.compareAndSet(...)

- This seems inherent to any stack…

… or is it?

Elimination

Consider several concurrent accesses to a stack:

- T1 calls stk.push(item1)

- T2 calls stk.push(item2)

- T3 calls stk.pop()

- T4 calls stk.push(item4)

- T5 calls stk.pop()

- T6 calls stk.pop()

Trick Question. What is the state of stk after these calls?

What do we need the stack for?

Match and exchange!

Cut out the middleperson!

A Different Strategy

- Attempt to push/pop to stack

  - if success, good job

- If attempt fails, try to find a partner

  - if push, try to find a pop and give them your value

  - if pop, try to find a push and take their value
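
To illustrate the pairing idea only, the sketch below uses the JDK's java.util.concurrent.Exchanger as a stand-in for an exchange object; it is not the exchange object the course goes on to build. One thread plays a push that hands over its item, the other plays a pop that takes it, and the stack is never touched. The class name EliminationSketch and the five-second timeout are arbitrary assumptions. A real elimination scheme would also need to make sure a push is never paired with another push, which this two-thread demo sidesteps.

```java
import java.util.concurrent.Exchanger;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical sketch of a push and a pop meeting directly.
class EliminationSketch {
    // Returns the value received by the popper, or null if no partner arrived in time.
    static String run() {
        Exchanger<String> exchanger = new Exchanger<>();
        String[] popped = {null};

        Thread pusher = new Thread(() -> {
            try {
                // A push offers its item and ignores what it receives.
                exchanger.exchange("item1", 5, TimeUnit.SECONDS);
            } catch (InterruptedException | TimeoutException e) {
                // No partner found: a full implementation would retry on the stack.
            }
        });
        Thread popper = new Thread(() -> {
            try {
                // A pop offers nothing (null) and takes the partner's item.
                popped[0] = exchanger.exchange(null, 5, TimeUnit.SECONDS);
            } catch (InterruptedException | TimeoutException e) {
                // No partner found: a full implementation would retry on the stack.
            }
        });
        pusher.start();
        popper.start();
        try {
            pusher.join();
            popper.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return popped[0];
    }

    public static void main(String[] args) {
        System.out.println("popped via exchange: " + run());
    }
}
```

When the two threads meet within the timeout, the pop returns the pushed item and neither thread ever performs a compareAndSet on top, which is what relieves the sequential bottleneck.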

Next time: implement an exchange object to facilitate this