Lecture 17: More Linked Lists

Overview

- Review: Coarse- and Fine-grained Lists

- Optimistic List

- Lazy List

Announcements

- Comments on proposals posted

- see shared Google drive

- please consider/respond to comments

- forthcoming lab/hw assignments are optional

- can be submitted by end of finals week

- lowest grades dropped

- Quizzes weeks of 4/26, 5/3, 5/10, 5/17

- no comprehensive exam

- Final project steps

- Proof of concept due 5/7

- Short video due 5/19

- Final submission due 5/28

Last Time

A Set of elements:

- store a collection of distinct elements

- add an element: no effect if element already there

- remove an element: no effect if not present

- check if set contains an element

An Interface

public interface SimpleSet<T> {

/*

* Add an element to the SimpleSet. Returns true if the element

* was not already in the set.

*/

boolean add(T x);

/*

* Remove an element from the SimpleSet. Returns true if the

* element was previously in the set.

*/

boolean remove(T x);

/*

* Test if a given element is contained in the set.

*/

boolean contains(T x);

}

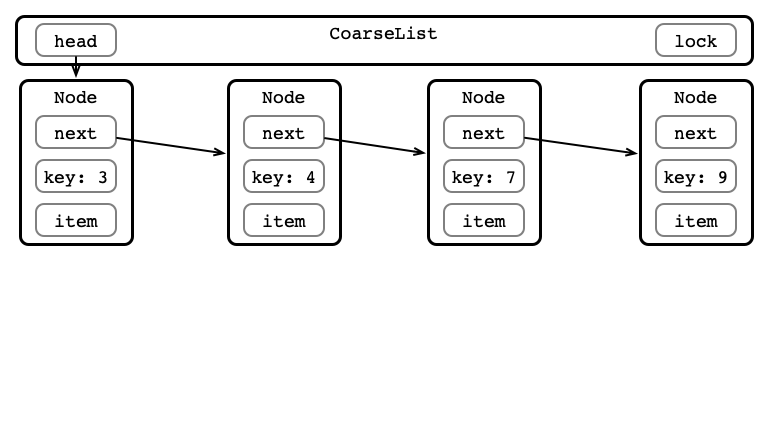

Coarse-grained Locking

One lock for whole data structure

For any operation:

- Lock entire list

- Perform operation

- Unlock list
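The three steps above can be sketched roughly as follows. This is a minimal illustration, not the course's reference implementation: the class name CoarseListSketch, the sentinel head and tail nodes, and the use of hashCode() as the sort key are assumptions made for the example (elements with equal hash codes are treated as the same element, and keys are assumed to lie strictly between the two sentinel values).

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical coarse-grained sorted list: one lock guards every operation.
class CoarseListSketch<T> {
    private class Node {
        final T item;
        final int key;
        Node next;
        Node(T item, int key) { this.item = item; this.key = key; }
    }

    // Assumed sentinels with the smallest and largest possible keys.
    private final Node head;
    private final Lock lock = new ReentrantLock();

    CoarseListSketch() {
        head = new Node(null, Integer.MIN_VALUE);
        head.next = new Node(null, Integer.MAX_VALUE);
    }

    public boolean add(T x) {
        int key = x.hashCode();
        lock.lock();                          // lock entire list
        try {
            Node pred = head;
            Node curr = pred.next;
            while (curr.key < key) {          // iterate to find location
                pred = curr;
                curr = curr.next;
            }
            if (curr.key == key) return false; // already present
            Node node = new Node(x, key);
            node.next = curr;
            pred.next = node;                 // insert item
            return true;
        } finally {
            lock.unlock();                    // unlock list
        }
    }

    public boolean contains(T x) {
        int key = x.hashCode();
        lock.lock();
        try {
            Node curr = head.next;
            while (curr.key < key) curr = curr.next;
            return curr.key == key;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        CoarseListSketch<Integer> set = new CoarseListSketch<>();
        System.out.println(set.add(3));       // true
        System.out.println(set.add(3));       // false
        System.out.println(set.contains(3));  // true
    }
}
```

Placing unlock() in a finally block ensures the list is released even if the operation throws.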

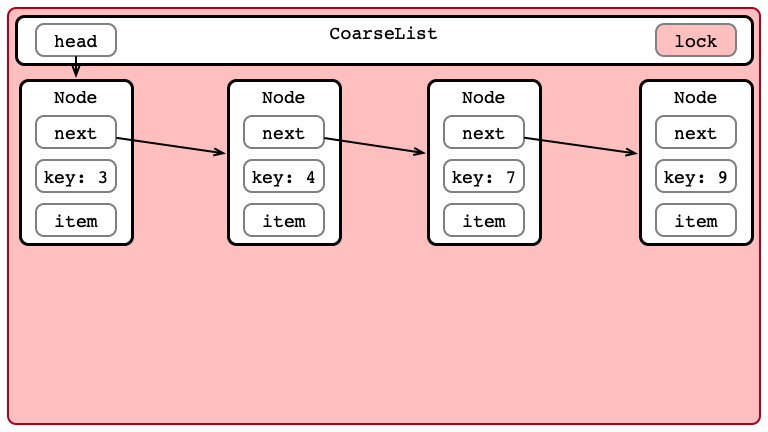

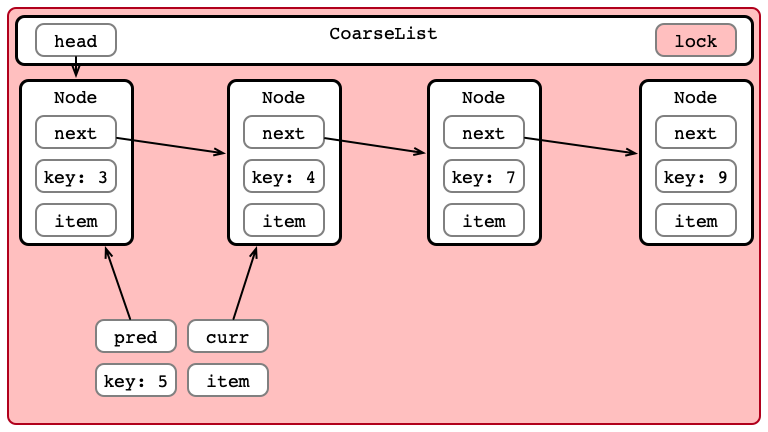

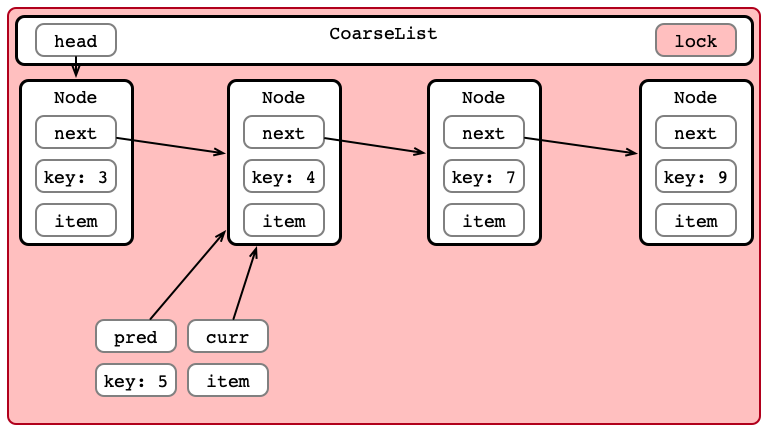

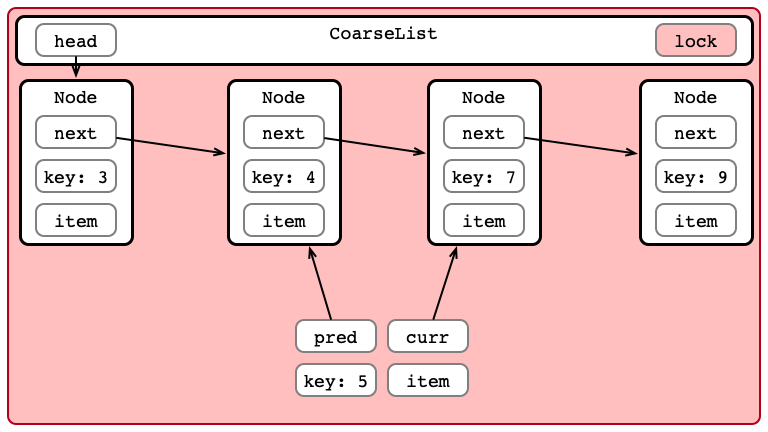

Coarse-grained Insertion

Step 1: Acquire Lock

Step 2: Iterate to Find Location

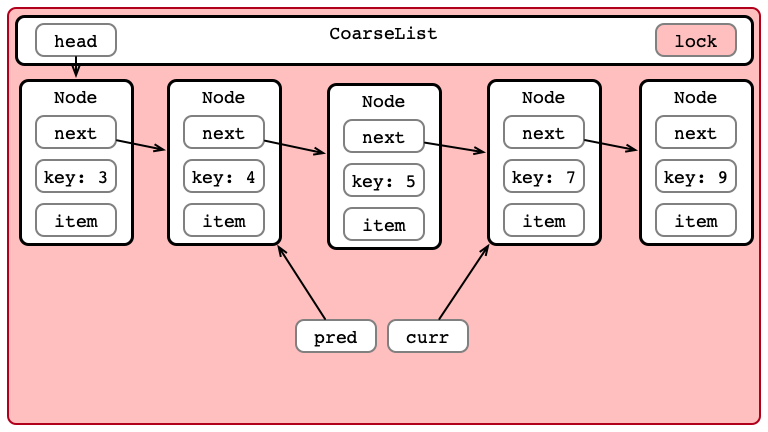

Step 3: Insert Item

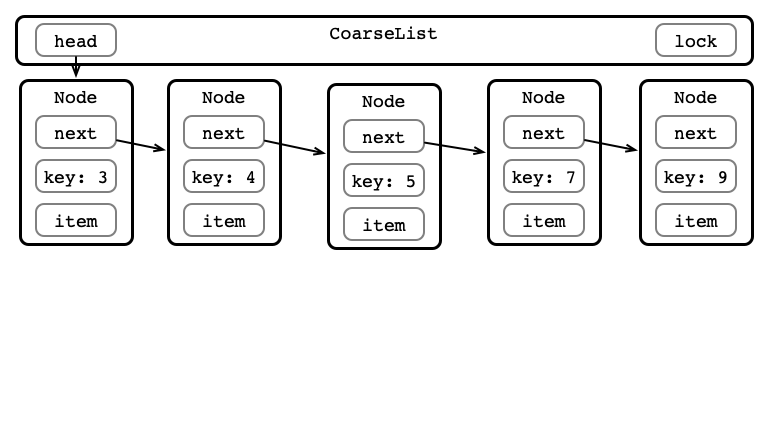

Step 4: Unlock List

Coarse-grained Appraisal

Advantages:

- Easy to reason about

- Easy to implement

Disadvantages:

- No parallelism

- All operations are blocking

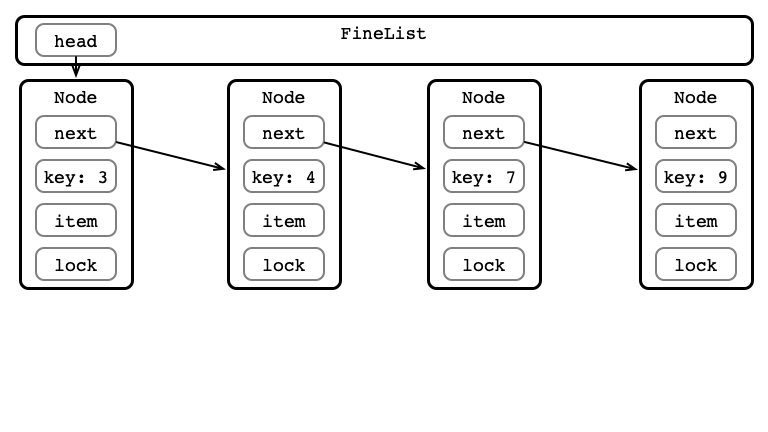

Fine-grained Locking

One lock per node

For any operation:

- Lock head and its next

- Hand-over-hand locking while searching

- always hold at least one lock

- Perform operation

- Release locks
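Hand-over-hand locking might look like the sketch below. It assumes the same setup as the rest of the lecture (sorted list, sentinel head and tail, hashCode() as key); the class name FineListSketch is made up for the example. The important detail is the order inside the loop: the lock on the old pred is released only while curr is still held, and the next node is locked before moving on, so the thread always holds at least one lock.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical fine-grained sorted list: one lock per node.
class FineListSketch<T> {
    private class Node {
        final T item;
        final int key;
        Node next;
        final Lock lock = new ReentrantLock();
        Node(T item, int key) { this.item = item; this.key = key; }
    }

    private final Node head;

    FineListSketch() {
        head = new Node(null, Integer.MIN_VALUE);       // assumed sentinels
        head.next = new Node(null, Integer.MAX_VALUE);
    }

    public boolean add(T x) {
        int key = x.hashCode();
        head.lock.lock();                 // lock head ...
        Node pred = head;
        try {
            Node curr = pred.next;
            curr.lock.lock();             // ... and its next
            try {
                while (curr.key < key) {
                    pred.lock.unlock();   // release the trailing lock
                    pred = curr;          // curr is still locked here
                    curr = curr.next;
                    curr.lock.lock();     // acquire the next lock
                }
                if (curr.key == key) return false;
                Node node = new Node(x, key);
                node.next = curr;
                pred.next = node;         // perform operation
                return true;
            } finally {
                curr.lock.unlock();       // release locks
            }
        } finally {
            pred.lock.unlock();
        }
    }

    public static void main(String[] args) {
        FineListSketch<Integer> set = new FineListSketch<>();
        System.out.println(set.add(5));   // true
        System.out.println(set.add(5));   // false
    }
}
```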

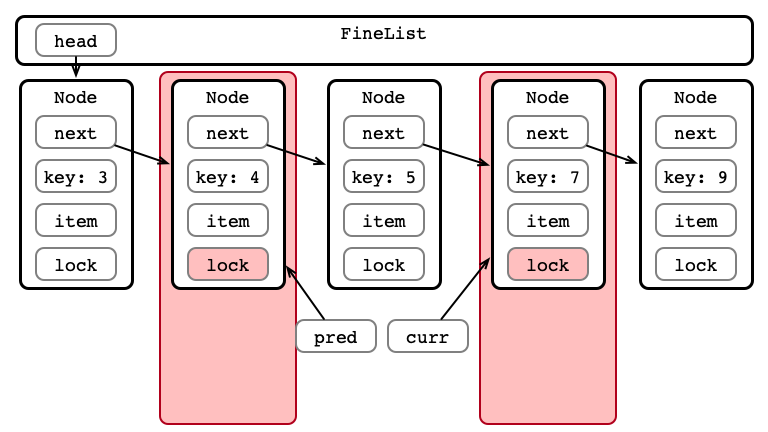

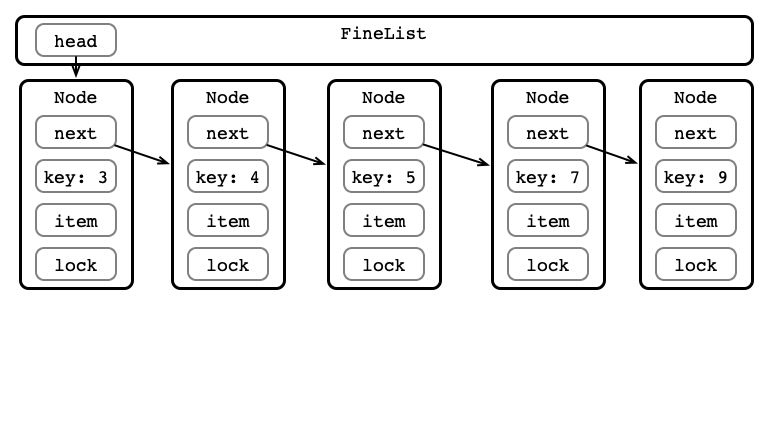

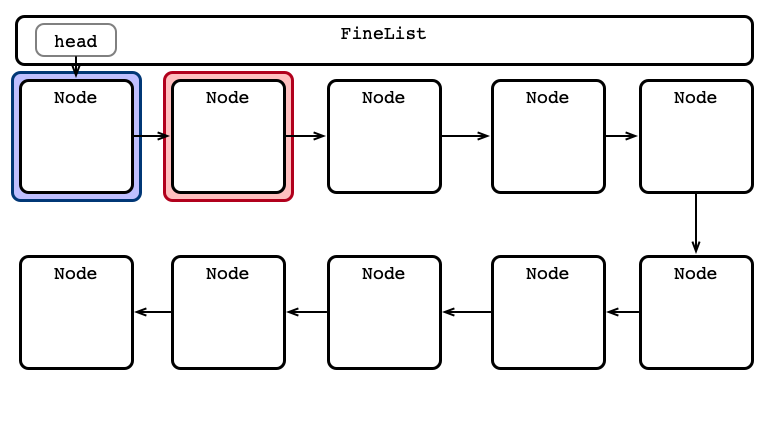

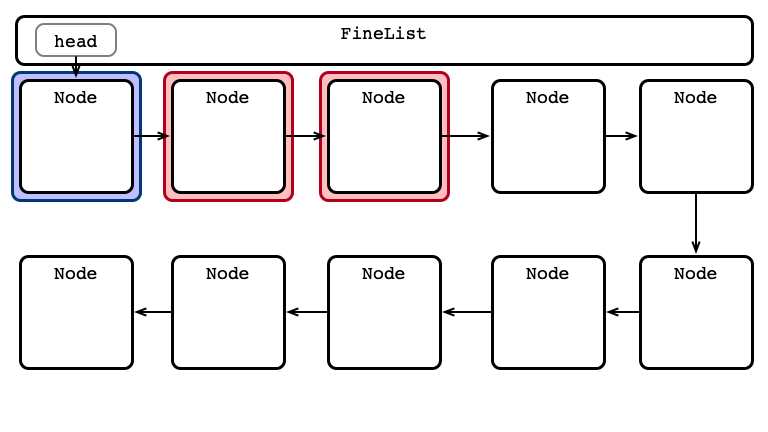

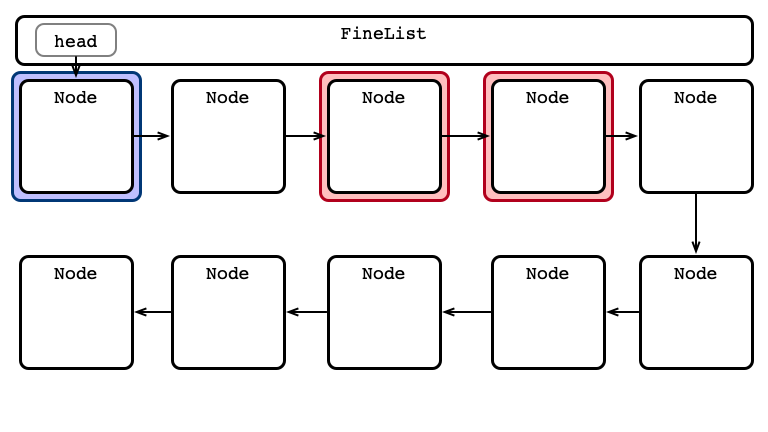

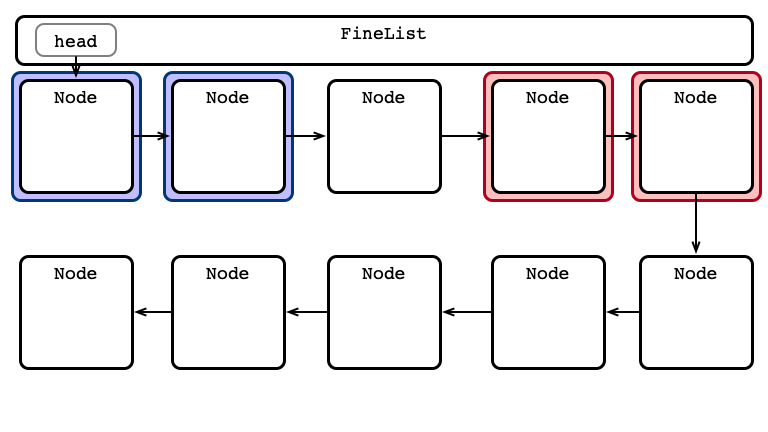

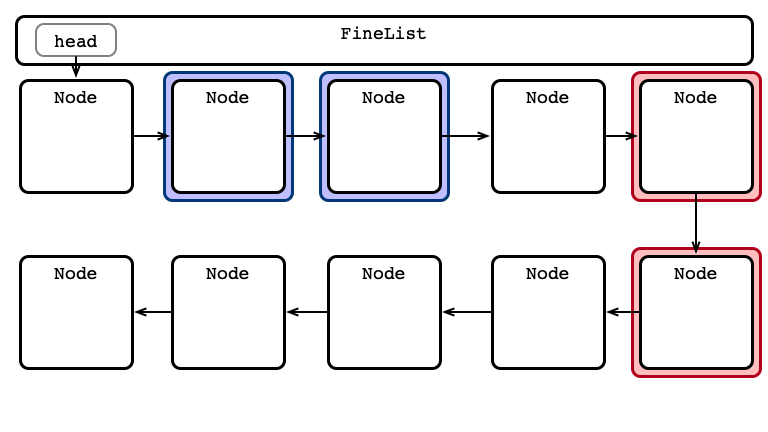

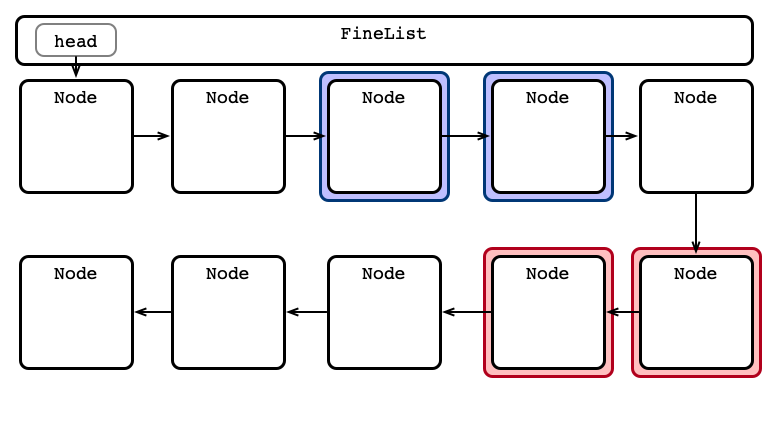

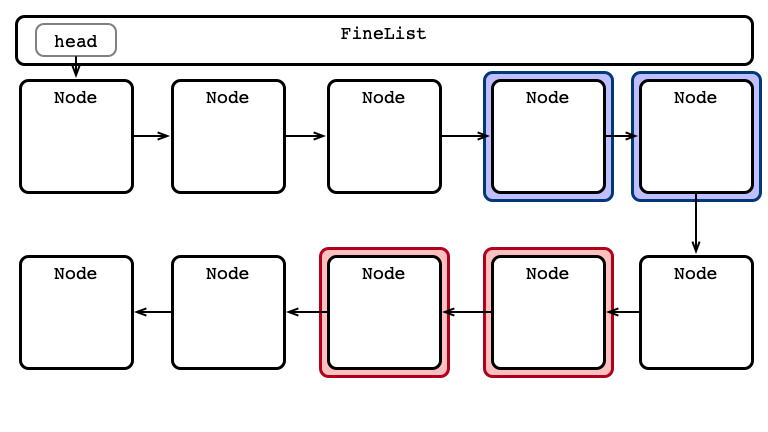

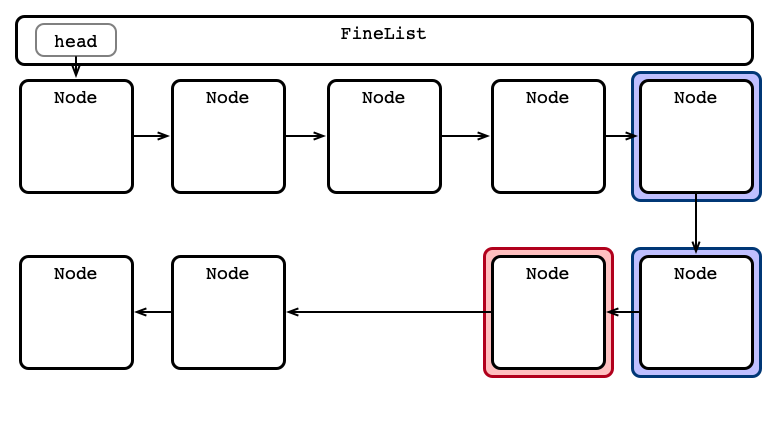

A Fine-grained Insertion

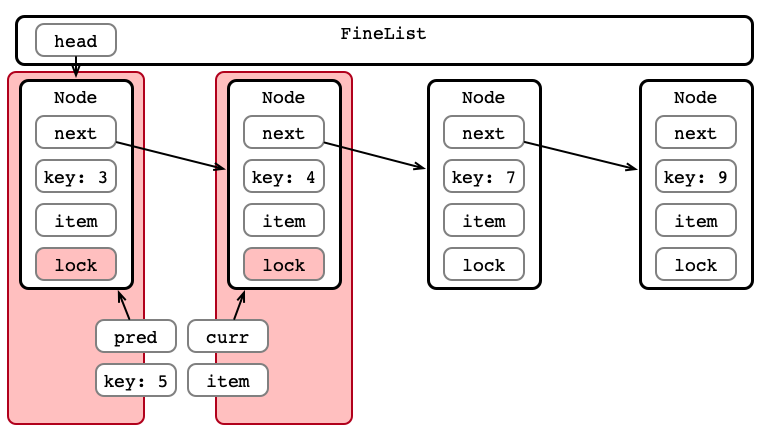

Step 1: Lock Initial Nodes

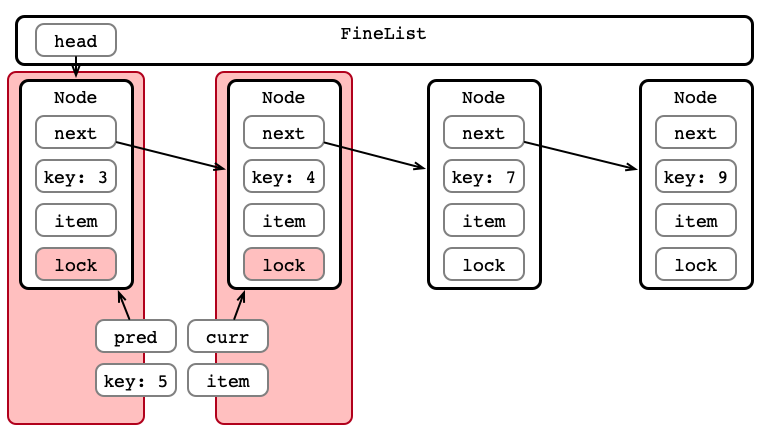

Step 2: Hand-over-hand Locking

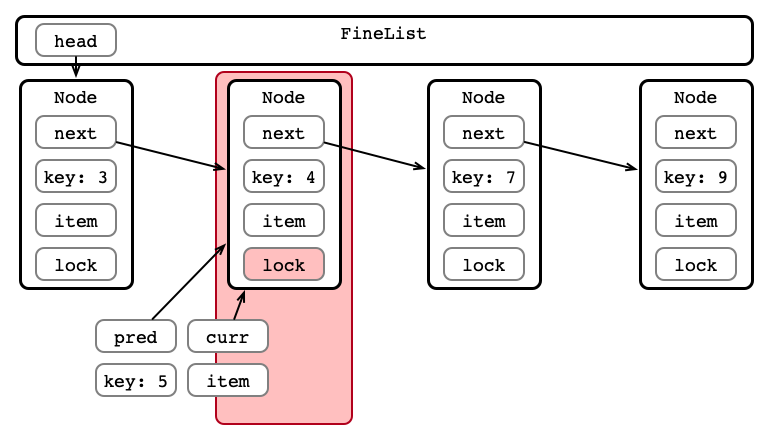

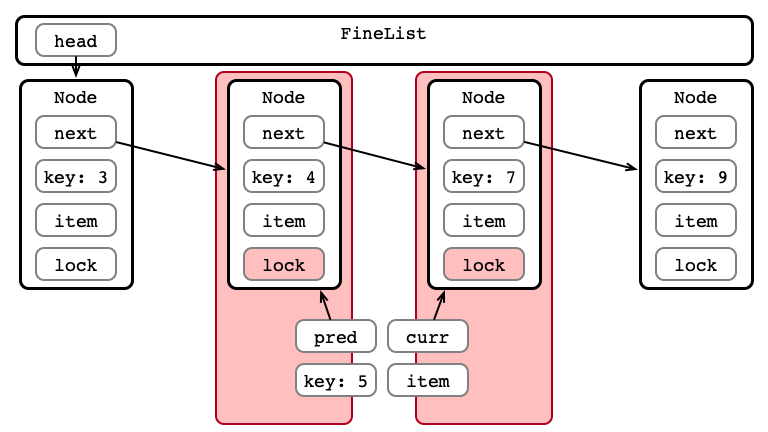

Step 3: Perform Insertion

Step 4: Unlock Nodes

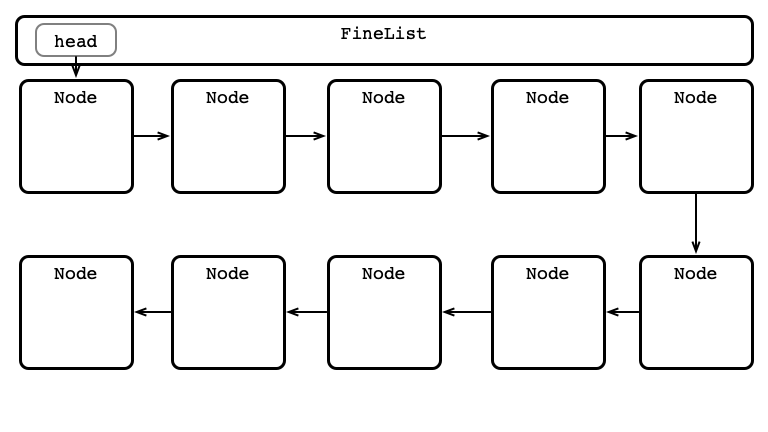

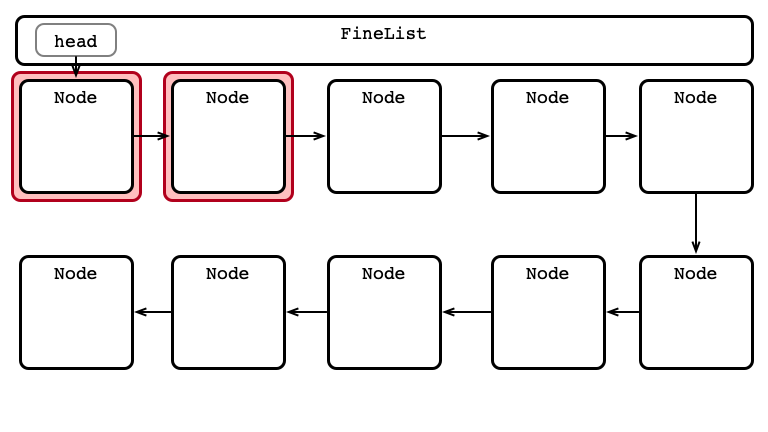

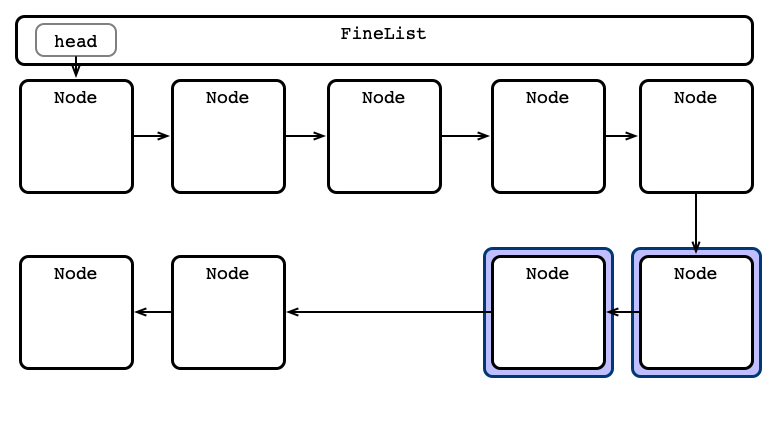

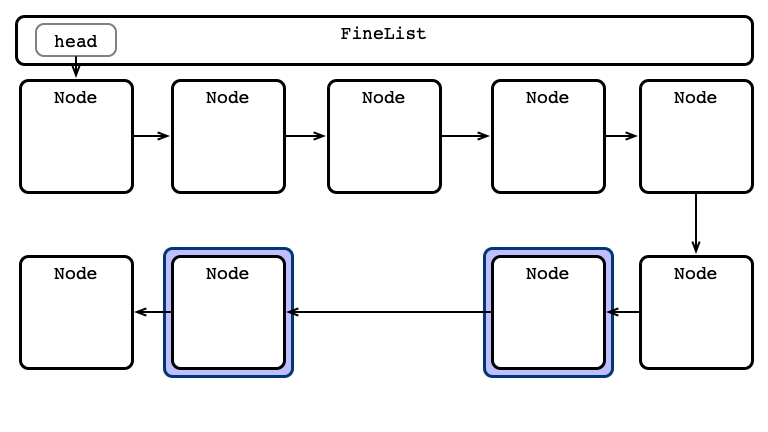

An Advantage: Parallel Access

Fine-grained Appraisal

Advantages:

- Parallel access

- Reasonably simple implementation

Disadvantages:

- More locking overhead

- can be much slower than coarse-grained

- All operations are blocking

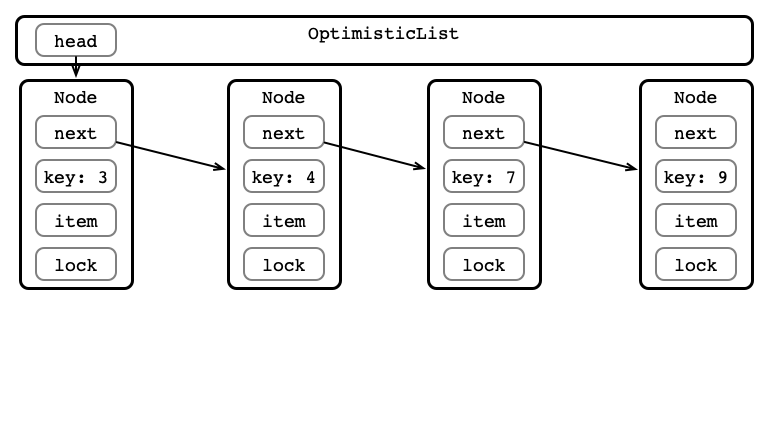

Optimistic Synchronization

Fine-grained wastes resources locking

- Nodes are locked when traversed

- Locked even if not modified!

A better procedure?

- Traverse without locking

- Lock relevant nodes

- Perform operation

- Unlock nodes

A Better Way?

What Could Go Wrong?

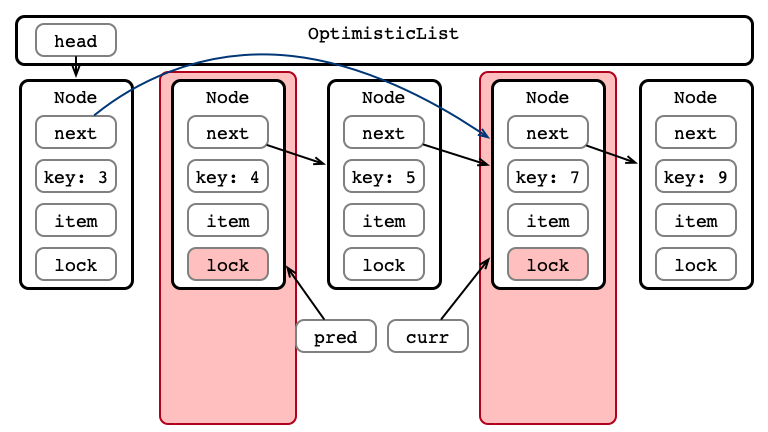

An Issue!

Between traversing and locking

- Another thread modifies the list

- Now locked nodes aren’t the right nodes!

An Issue, Illustrated

How can we Address this Issue?

Optimistic Synchronization, Validated

- Traverse without locking

- Lock relevant nodes

- Validate list

- if validation fails, go back to Step 1

- Perform operation

- Unlock nodes

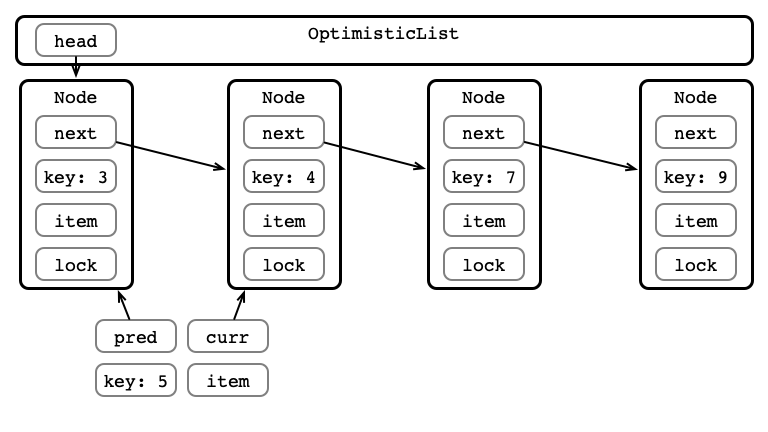

How do we Validate?

After locking, ensure that:

- pred is reachable from head

- curr is pred’s successor

If these conditions aren’t met:

- Start over!

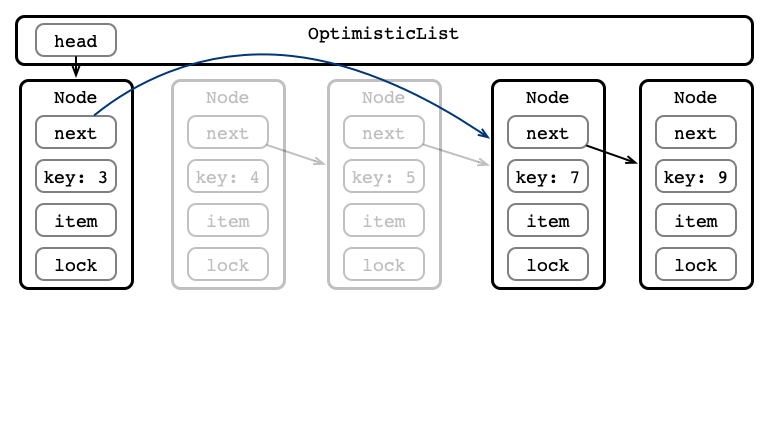

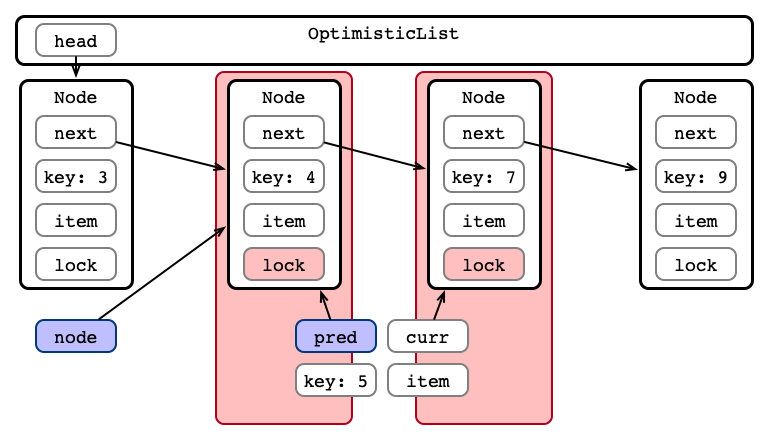

Optimistic Insertion

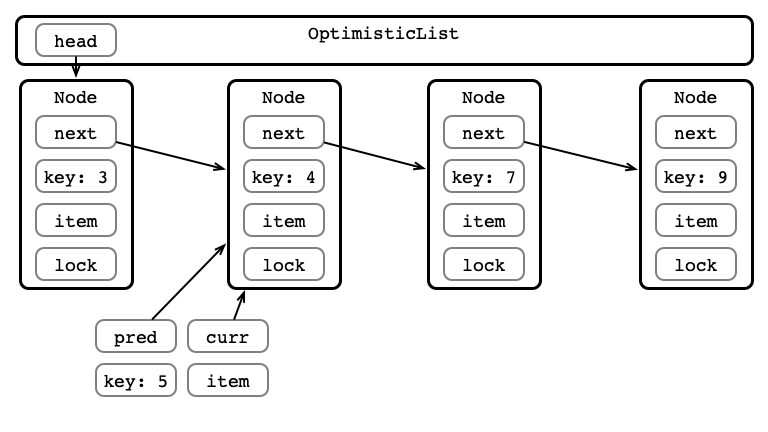

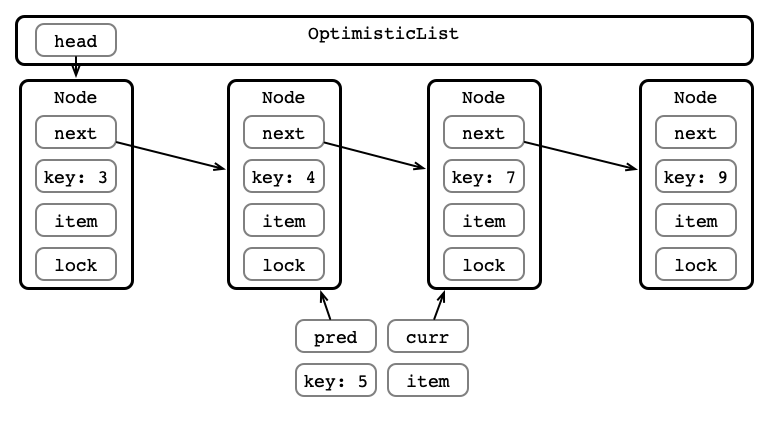

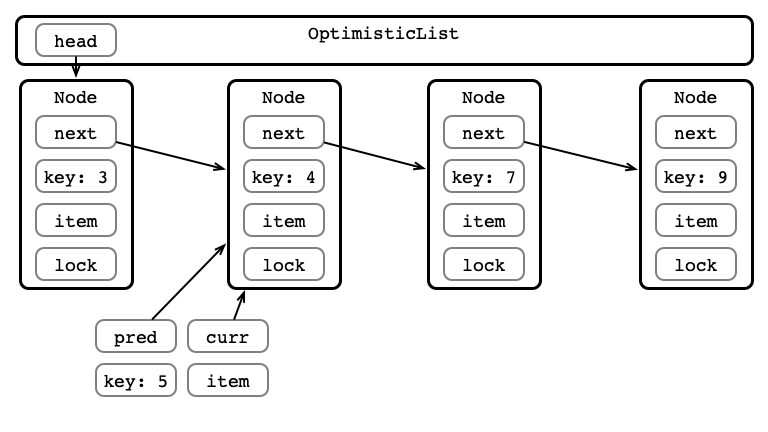

Step 1: Traverse the List

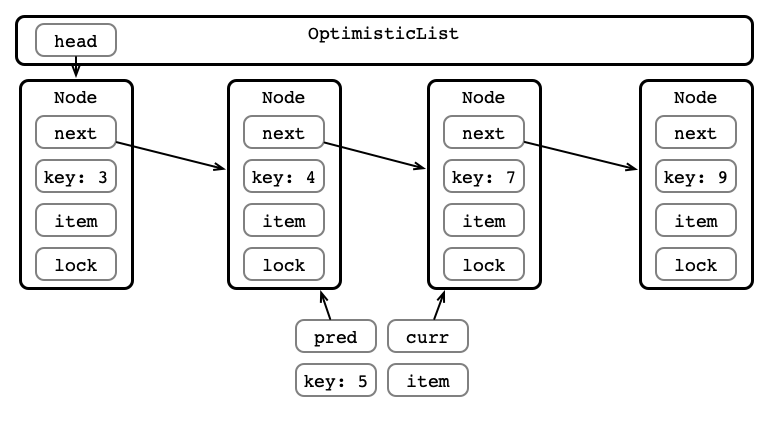

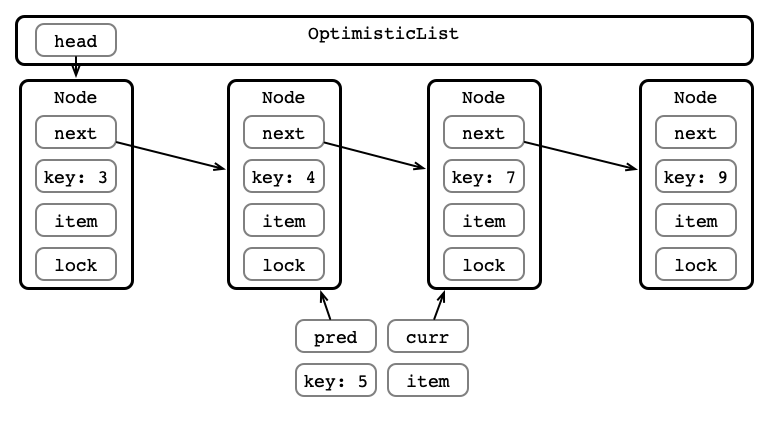

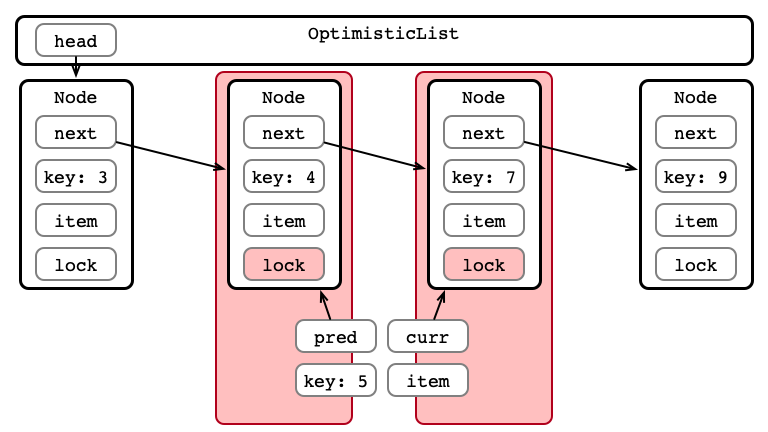

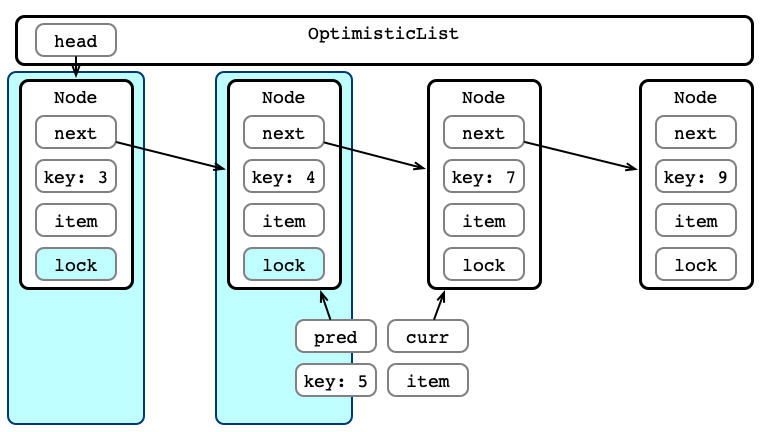

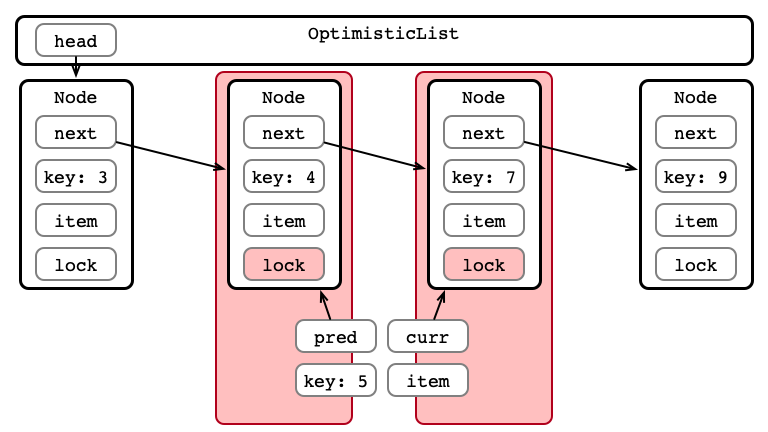

Step 2: Acquire Locks

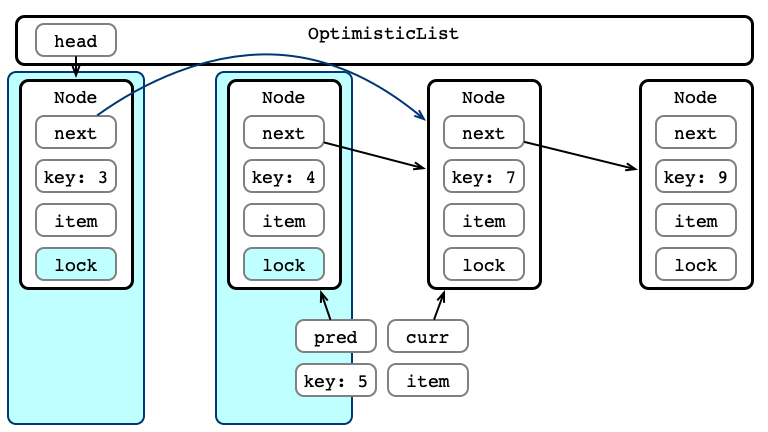

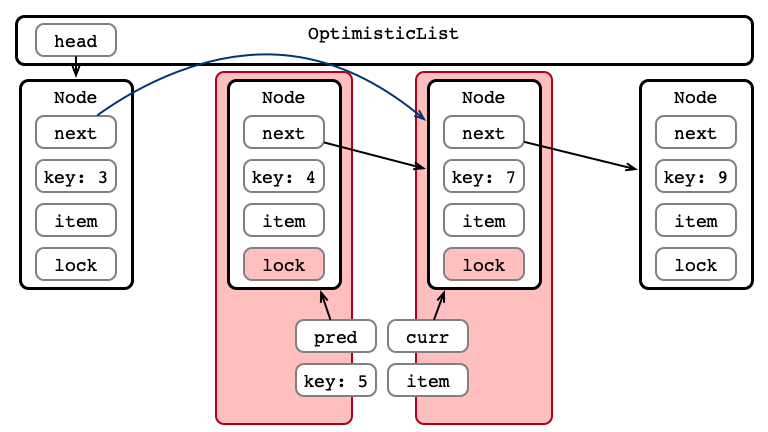

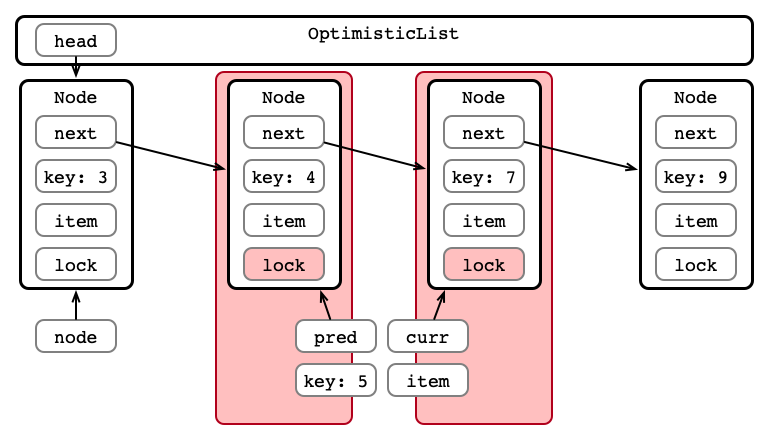

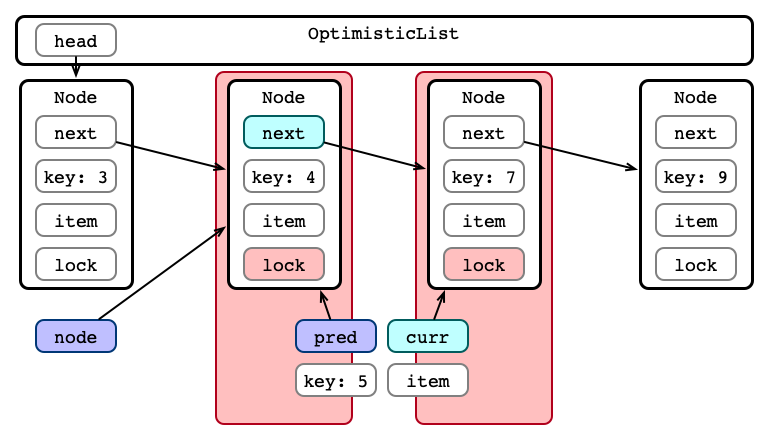

Step 3: Validate List - Traverse

Step 3: Validate List - pred Reachable?

Step 3: Validate List - Is curr next?

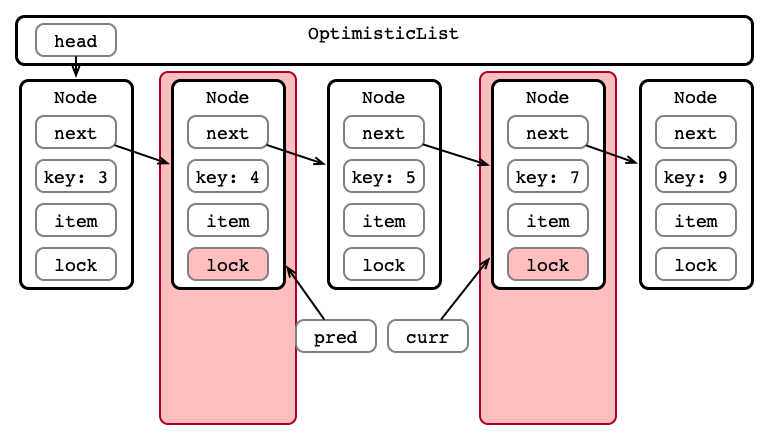

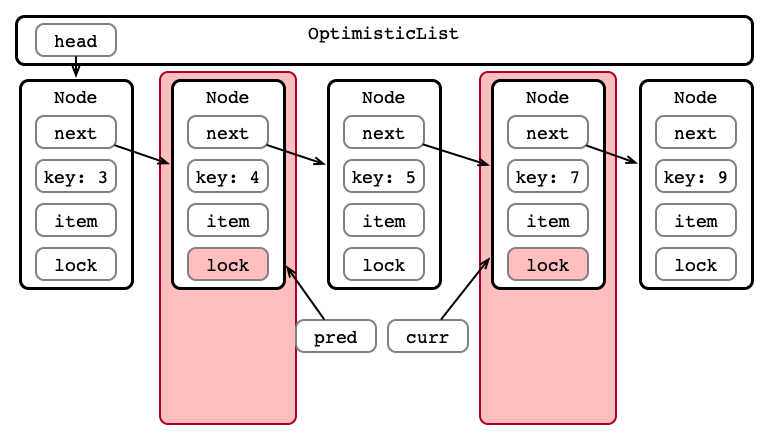

Step 4: Perform Insertion

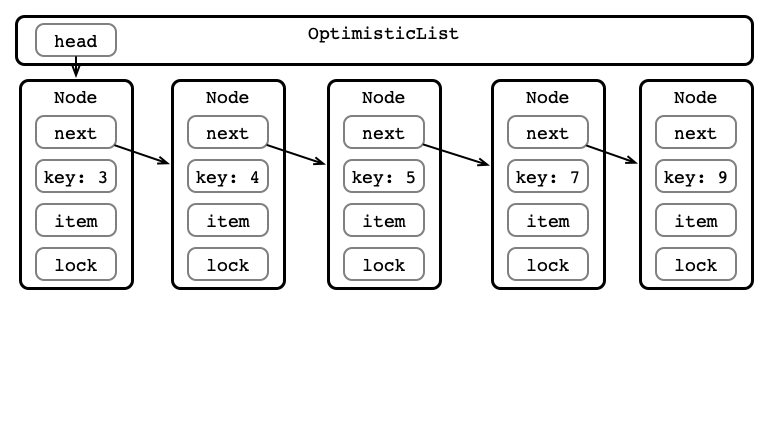

Step 5: Release Locks

Implementing Validation

private boolean validate (Node pred, Node curr) {

Node node = head;

while (node.key <= pred.key) {

if (node == pred) {

return pred.next == curr;

}

node = node.next;

}

return false;

}
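Putting the five steps together with the validate method above, an optimistic add might be sketched as follows. The class name OptimisticListSketch and the sentinel nodes are assumptions for the example. The next field is declared volatile here, which is also an assumption: the traversal reads it without holding any lock, so the sketch asks the Java memory model to make other threads' updates visible.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical optimistic sorted list: traverse unlocked, lock, validate.
class OptimisticListSketch<T> {
    private class Node {
        final T item;
        final int key;
        volatile Node next;               // read without locks during traversal
        final Lock lock = new ReentrantLock();
        Node(T item, int key) { this.item = item; this.key = key; }
    }

    private final Node head;

    OptimisticListSketch() {
        head = new Node(null, Integer.MIN_VALUE);       // assumed sentinels
        head.next = new Node(null, Integer.MAX_VALUE);
    }

    public boolean add(T x) {
        int key = x.hashCode();
        while (true) {                    // retry until validation succeeds
            Node pred = head;
            Node curr = pred.next;
            while (curr.key < key) {      // Step 1: traverse without locking
                pred = curr;
                curr = curr.next;
            }
            pred.lock.lock();             // Step 2: lock relevant nodes
            curr.lock.lock();
            try {
                if (validate(pred, curr)) {            // Step 3: validate
                    if (curr.key == key) return false;
                    Node node = new Node(x, key);
                    node.next = curr;
                    pred.next = node;     // Step 4: perform operation
                    return true;
                }
                // validation failed: fall through and start over
            } finally {
                curr.lock.unlock();       // Step 5: release locks
                pred.lock.unlock();
            }
        }
    }

    // Same check as in the lecture: pred reachable from head, curr follows pred.
    private boolean validate(Node pred, Node curr) {
        Node node = head;
        while (node.key <= pred.key) {
            if (node == pred) return pred.next == curr;
            node = node.next;
        }
        return false;
    }

    public static void main(String[] args) {
        OptimisticListSketch<Integer> set = new OptimisticListSketch<>();
        System.out.println(set.add(7));   // true
        System.out.println(set.add(7));   // false
    }
}
```

Note that each successful operation walks the list twice, once to find the position and once to validate it.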

Question

Under what conditions might optimistic synchronization be fast/slow?

Testing Optimistic Synchronization

Optimistic Appraisal

Advantages:

- Less locking than fine-grained

- More opportunities for parallelism than coarse-grained

Disadvantages:

- Validation could fail

- Not starvation-free

- even if locks are starvation-free

So Far

All operations have been blocking

- Each method call locks a portion of the data structure

- Method calls lock out other calls

- True even for contains() calls, which don’t modify the data structure

Observation

Operations are complicated because they consist of several steps

- hard to reason about when the operation appears to take place

- coarse/fine-grained synchronization stop other threads from seeing operations “in progress”

- optimistic synchronization may encounter “in progress” operations before locking

- validation required

Lazy Synchronization

- Mark a node before physical removal

- marked nodes are logically removed, still physically present

- Only marked nodes are ever removed

Validation simplified:

- Just check if nodes are marked

- No need to traverse whole list!

Lazy Operation

- Traverse without locking

- Lock relevant nodes

- Validate list

- check nodes are not marked

- check nodes have the correct relationship (pred.next == curr)

- if validation fails, go back to Step 1

- Perform operation

- for removal, mark node first

- Unlock nodes

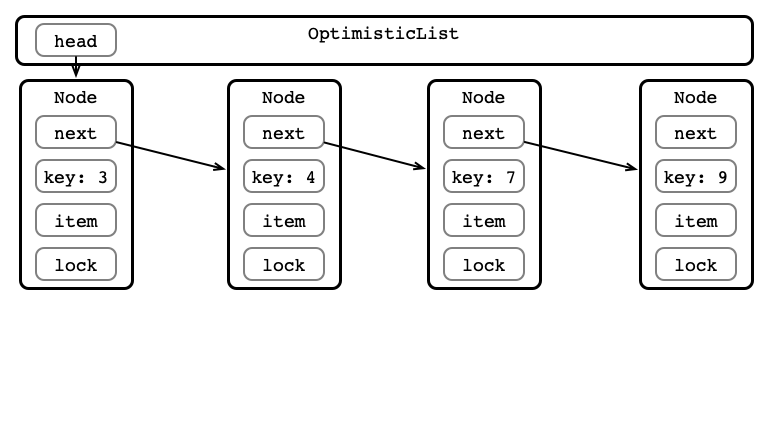

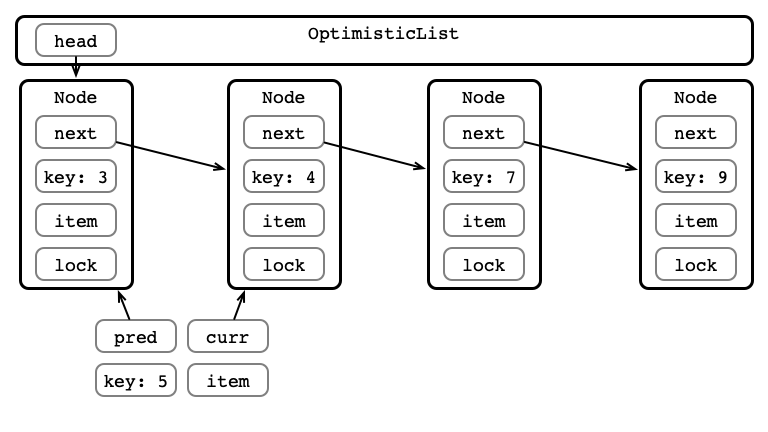

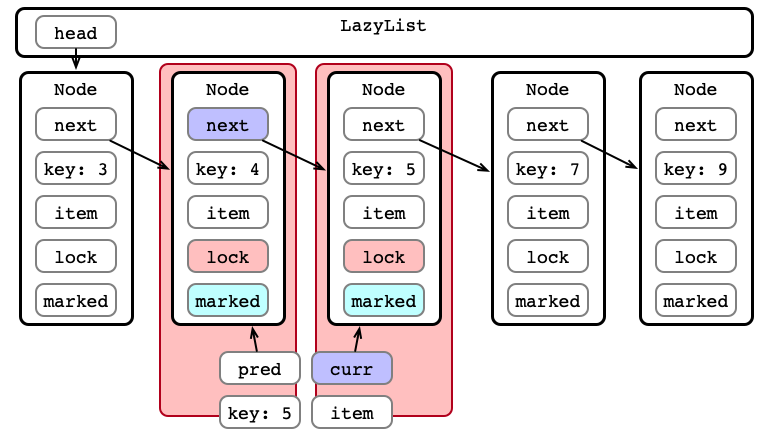

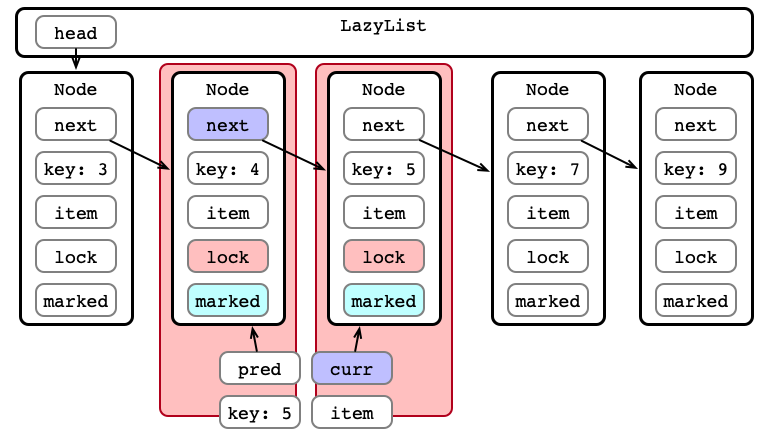

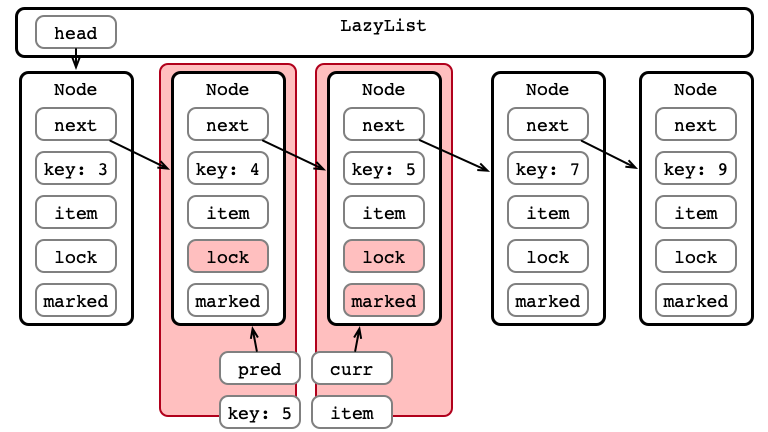

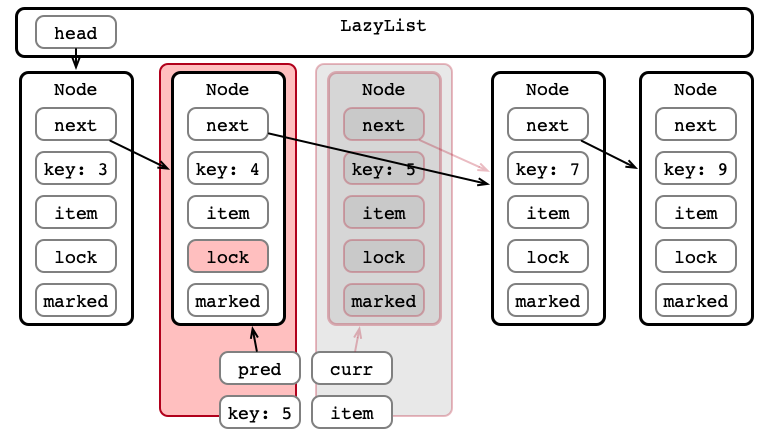

Lazy Removal Illustrated

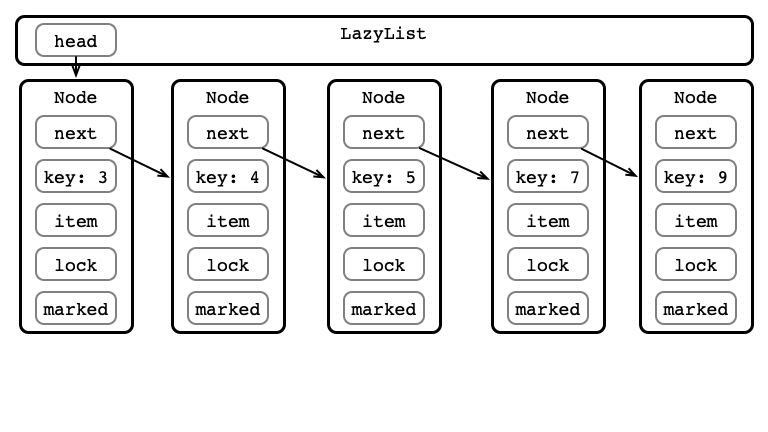

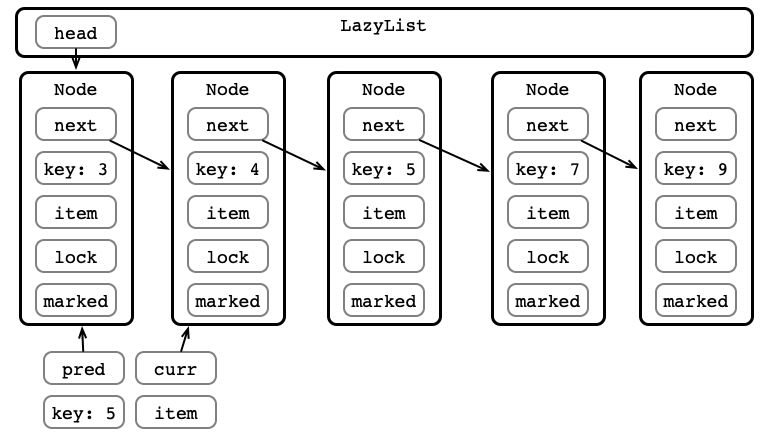

Step 1: Traverse List

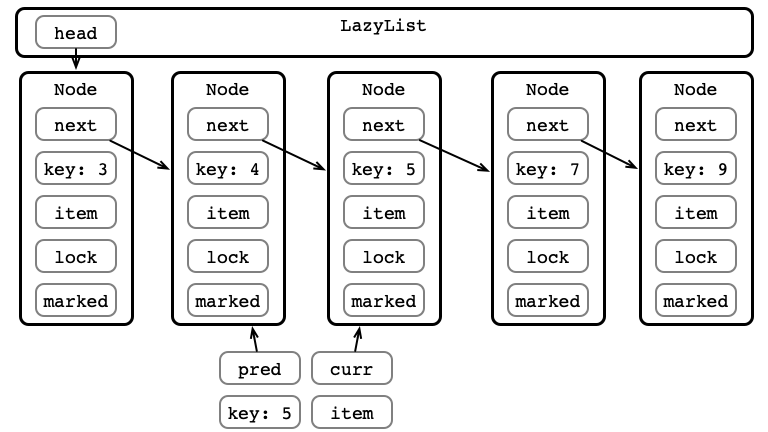

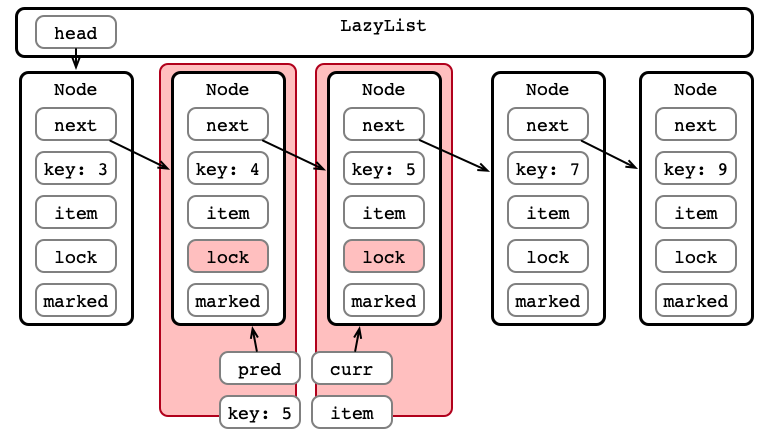

Step 2: Lock Nodes

Step 3: Validate pred.next == curr?

Step 3: Validate not marked?

Step 4a: Perform Logical Removal

Step 4b: Perform Physical Removal

Step 5: Release Locks and Done!

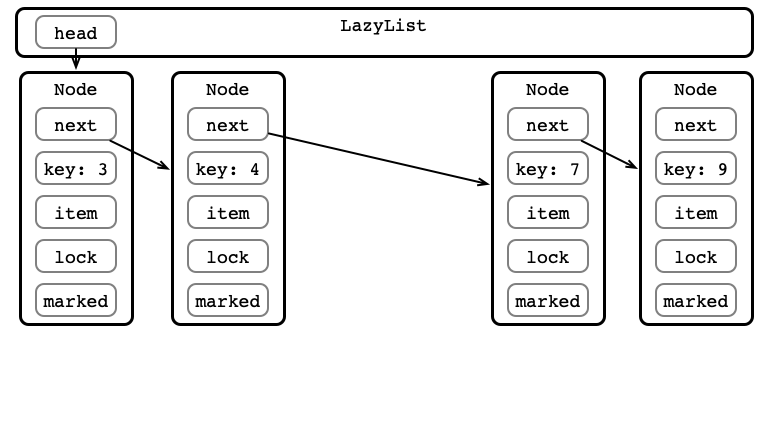

A Node in Code

private class Node {

T item;

int key;

Node next;

Lock lock;

volatile boolean marked;

public Node (int key) {

this.item = null;

this.key = key;

this.next = null;

this.lock = new ReentrantLock();

this.marked = false;

}

public Node (T item) {

this.item = item;

this.key = item.hashCode();

this.next = null;

this.lock = new ReentrantLock();

}

public void lock () {

lock.lock();

}

public void unlock () {

lock.unlock();

}

}

Validation, Simplified

private boolean validate (Node pred, Node curr) {

return !pred.marked && !curr.marked && pred.next == curr;

}
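With this constant-time validate, lazy add and remove might be sketched as below. The class name LazyListSketch, the sentinel nodes, and the volatile next field are assumptions for the example; the Node here is a trimmed-down version of the one shown earlier. In remove, the node is marked (logical removal) before it is unlinked (physical removal).

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical lazy sorted list: mark first, unlink second.
class LazyListSketch<T> {
    private class Node {
        final T item;
        final int key;
        volatile Node next;               // assumption: read without locks
        volatile boolean marked = false;
        final Lock lock = new ReentrantLock();
        Node(T item, int key) { this.item = item; this.key = key; }
    }

    private final Node head;

    LazyListSketch() {
        head = new Node(null, Integer.MIN_VALUE);       // assumed sentinels
        head.next = new Node(null, Integer.MAX_VALUE);
    }

    private boolean validate(Node pred, Node curr) {
        return !pred.marked && !curr.marked && pred.next == curr;
    }

    public boolean add(T x) {
        int key = x.hashCode();
        while (true) {
            Node pred = head;
            Node curr = pred.next;
            while (curr.key < key) { pred = curr; curr = curr.next; }
            pred.lock.lock();
            curr.lock.lock();
            try {
                if (validate(pred, curr)) {
                    if (curr.key == key) return false;
                    Node node = new Node(x, key);
                    node.next = curr;
                    pred.next = node;
                    return true;
                }
            } finally {
                curr.lock.unlock();
                pred.lock.unlock();
            }
        }
    }

    public boolean remove(T x) {
        int key = x.hashCode();
        while (true) {
            Node pred = head;
            Node curr = pred.next;
            while (curr.key < key) { pred = curr; curr = curr.next; }
            pred.lock.lock();
            curr.lock.lock();
            try {
                if (validate(pred, curr)) {
                    if (curr.key != key) return false;
                    curr.marked = true;       // Step 4a: logical removal
                    pred.next = curr.next;    // Step 4b: physical removal
                    return true;
                }
            } finally {
                curr.lock.unlock();
                pred.lock.unlock();
            }
        }
    }

    public static void main(String[] args) {
        LazyListSketch<Integer> set = new LazyListSketch<>();
        System.out.println(set.add(4));       // true
        System.out.println(set.remove(4));    // true
        System.out.println(set.remove(4));    // false
    }
}
```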

Improvements?

- Limited locking as in optimistic synchronization

- Simpler validation

- faster—no list traversal

- more likely to succeed?

- Logical removal easier to reason about

- linearization point at logical removal line

- contains() no longer acquires locks

- often most frequent operation

- now it is wait-free!

Wait-free Containment

public boolean contains (T item) {

int key = item.hashCode();

Node curr = head;

while (curr.key < key) {

curr = curr.next;

}

return curr.key == key && !curr.marked;

}

Testing Performance!

Lazy Appraisal

Advantages:

- Less locking than fine-grained

- More opportunities for parallelism than coarse-grained

- Simpler validation than optimistic

- Wait-free contains method

Disadvantages:

- Validation could still fail (though maybe less likely)

- Not starvation-free

- even if locks are starvation-free

- add and remove still blocking

What’s next

Can we make all of the operations wait-free?

- A concurrent list without locks?

As Before

To get correctness without locks we need atomics!

AtomicMarkableReference<T>

- Stores

- a reference to a T

- a boolean marked

- Atomic operations

- boolean compareAndSet(T expectedRef, T newRef, boolean expectedMark, boolean newMark)

- T get(boolean[] marked)

- T getReference()

- boolean isMarked()
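A small example of these operations using java.util.concurrent.atomic.AtomicMarkableReference. The class name MarkableDemo and its demo method are made up for illustration. Note that compareAndSet compares references with ==, not equals, and succeeds only if both the reference and the mark match the expected values.

```java
import java.util.concurrent.atomic.AtomicMarkableReference;

// Hypothetical demo of reading and updating a reference and its mark together.
class MarkableDemo {
    // Returns the results of three compareAndSet attempts, in order.
    static boolean[] demo() {
        String a = "a";
        String b = "b";
        AtomicMarkableReference<String> ref =
            new AtomicMarkableReference<>(a, false);

        // Succeeds: current state is (a, unmarked), as expected.
        boolean swapped = ref.compareAndSet(a, b, false, false);

        // Succeeds: keeps the reference b but sets the mark.
        boolean markedNow = ref.compareAndSet(b, b, false, true);

        // Fails: the expected mark is false, but the mark is now true.
        boolean stale = ref.compareAndSet(b, a, false, false);

        return new boolean[] { swapped, markedNow, stale };
    }

    public static void main(String[] args) {
        boolean[] results = demo();
        System.out.println(results[0] + " " + results[1] + " " + results[2]);
        // prints "true true false"

        AtomicMarkableReference<String> ref =
            new AtomicMarkableReference<>("x", true);
        boolean[] mark = new boolean[1];
        String value = ref.get(mark);     // reads reference and mark atomically
        System.out.println(value + " " + mark[0]);   // prints "x true"
    }
}
```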

Nonblocking List Idea

Similar to LazyList

- use AtomicMarkableReference to mark and modify references simultaneously

- modifications done by LazyList can be done atomically!

- add and remove are lock-free

- contains is wait-free (hence also lock-free)
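One way this idea could be realized is sketched below. It follows a common textbook formulation and is not necessarily the version the course will present. The names LockFreeListSketch, Window, and find are assumptions for the example. Each node's next field holds both the successor reference and a mark; a set mark means the node itself has been logically removed. The find helper unlinks any marked nodes it passes.

```java
import java.util.concurrent.atomic.AtomicMarkableReference;

// Hypothetical nonblocking sorted list built on AtomicMarkableReference.
class LockFreeListSketch<T> {
    private class Node {
        final T item;
        final int key;
        final AtomicMarkableReference<Node> next;  // mark: this node is removed
        Node(T item, int key, Node succ) {
            this.item = item;
            this.key = key;
            this.next = new AtomicMarkableReference<>(succ, false);
        }
    }

    private class Window {
        final Node pred, curr;
        Window(Node pred, Node curr) { this.pred = pred; this.curr = curr; }
    }

    private final Node head;

    LockFreeListSketch() {                         // assumed sentinels
        Node tail = new Node(null, Integer.MAX_VALUE, null);
        head = new Node(null, Integer.MIN_VALUE, tail);
    }

    // Finds unmarked pred and curr with pred.key < key <= curr.key,
    // physically removing marked nodes encountered on the way.
    private Window find(int key) {
        boolean[] marked = { false };
        retry:
        while (true) {
            Node pred = head;
            Node curr = pred.next.getReference();
            while (true) {
                Node succ = curr.next.get(marked);
                while (marked[0]) {
                    if (!pred.next.compareAndSet(curr, succ, false, false)) {
                        continue retry;            // list changed: start over
                    }
                    curr = succ;
                    succ = curr.next.get(marked);
                }
                if (curr.key >= key) return new Window(pred, curr);
                pred = curr;
                curr = succ;
            }
        }
    }

    public boolean add(T x) {
        int key = x.hashCode();
        while (true) {
            Window w = find(key);
            if (w.curr.key == key) return false;
            Node node = new Node(x, key, w.curr);
            // Fails if pred was marked or its successor changed; then retry.
            if (w.pred.next.compareAndSet(w.curr, node, false, false)) return true;
        }
    }

    public boolean remove(T x) {
        int key = x.hashCode();
        while (true) {
            Window w = find(key);
            if (w.curr.key != key) return false;
            Node succ = w.curr.next.getReference();
            // Logical removal: set the mark without changing the reference.
            if (!w.curr.next.compareAndSet(succ, succ, false, true)) continue;
            // Physical removal is best effort; find() cleans up if this fails.
            w.pred.next.compareAndSet(w.curr, succ, false, false);
            return true;
        }
    }

    public boolean contains(T x) {
        int key = x.hashCode();
        Node curr = head;
        while (curr.key < key) curr = curr.next.getReference();
        return curr.key == key && !curr.next.isMarked();
    }
}
```

In this sketch add and remove retry only when another thread's compareAndSet got in first, which is the sense in which they are lock-free; contains never retries.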